AI is no longer a set of experiments or isolated pilots sitting on the edge of enterprise operations. It is now woven into the core of how modern organizations function. From customer decisions and financial approvals to logistics and fraud detection, AI systems have become essential components of day-to-day execution. In this new environment, companies must recognize a critical shift: AI is no longer just technology. It is infrastructure.

With this shift comes a new category of risk. As AI begins to influence decisions across operations, the legal exposure, regulatory scrutiny, and reputational concerns it brings are no longer theoretical. The stakes have increased, but most organizations have not adjusted how they manage responsibility. Ownership remains unclear, fragmented, or undefined.

AI Is Now Infrastructure, Whether You Call It That Or Not

AI systems have become deeply embedded into critical business processes across multiple industries. Financial institutions use AI to score loans and detect fraud, healthcare providers deploy AI models to assist with diagnosis and treatment planning, logistics and supply chain teams rely on predictive models to optimize routes and manage inventory, and HR departments are increasingly using algorithms to screen and evaluate job applicants. In all of these cases, AI is no longer experimental. It is part of the operating core.

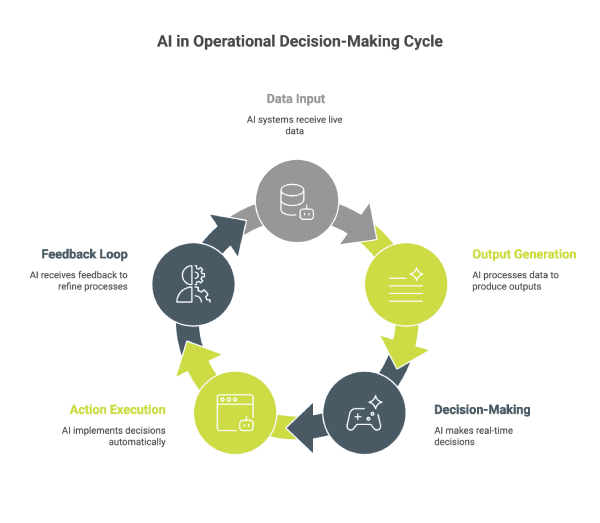

These systems are connected to live data, continuously generating outputs, and increasingly responsible for actions that would have previously required human oversight. They shape workflows in real time and operate behind the scenes without pausing to ask for approval. This is not just automation. It is operational decision-making happening at machine speed.

The Scope Of AI Risk Extends Far Beyond The Technology Team

As AI systems become infrastructure, the risk surface grows more complex. It is not enough to monitor technical performance. Leaders must consider how risk flows across functions and affects people, processes, and compliance. Technical risk includes performance degradation due to issues like model drift, faulty data inputs, or system downtime. These are the kinds of problems most data teams are trained to diagnose, but they are only one layer of the full picture.

Operational risk surfaces when AI systems interfere with established business processes. A system that flags a legitimate customer as fraudulent may trigger account freezes or service interruptions. A model that adjusts pricing based on demand signals could unintentionally breach regulatory thresholds or contradict manual discounting policies. These issues do not start with code, but with poor coordination between systems and stakeholders.

Regulatory risk is growing as well. Legislation focused on AI fairness, explainability, and accountability is gaining traction around the world. The EU AI Act and emerging U.S. policy frameworks are early examples of how governments are preparing to regulate machine-driven decisions the same way they regulate financial controls or privacy practices. Reputational risk must also be considered. Organizations are increasingly held publicly accountable for the behavior of their algorithms. Whether the issue is discrimination, bias, or an opaque decision-making process, the damage to trust and public perception can be lasting.

Legal risk presents some of the most difficult questions. If an AI system used in hiring discriminates against protected classes, or a vendor’s model misclassifies medical conditions, who is liable? Is it the developer who built the model, the internal team who deployed it, the executive who approved the initiative, or the organization itself?

The answer is rarely clear unless accountability has been defined in advance.

Traditional Ownership Models Were Not Designed For AI

Most organizations still approach AI governance as if it were a subset of IT or data science. Projects are handed off between departments. Data scientists are responsible for model accuracy. IT ensures the systems are secure and available. Business units use AI outputs in their workflows, but rarely question or audit them. Legal and compliance teams are often brought in only after something goes wrong.

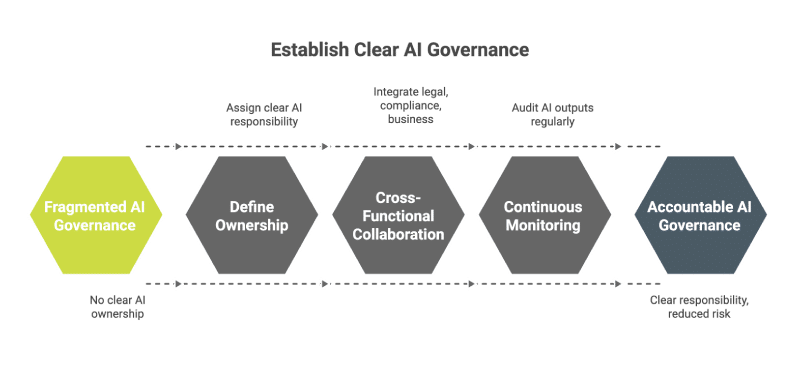

This fragmented approach leaves no one clearly accountable for the full risk profile of a system that now affects decisions across the enterprise.

AI sits at the intersection of several functions but is fully owned by none. This gap in ownership becomes visible when models produce unexpected outcomes. When an AI decision leads to harm or operational disruption, the question of responsibility is often answered after the fact, through internal investigation or external scrutiny.

Organizations Need A New Governance Structure Built For Scale

A modern AI governance model must reflect the reality that AI is operational infrastructure. That means oversight must be coordinated, ownership must be distributed, and risk must be managed like any other enterprise-level concern.

One of the most effective ways to establish this is through the formation of a formal AI governance council. This group should include senior leaders from technology, security, legal, compliance, operations, and the business units deploying AI tools. Its role is not to approve every project or slow innovation, but to set the guardrails that allow systems to scale responsibly.

Ownership must also be clearly defined at multiple levels:

- Model performance should be owned by the teams who design and monitor the algorithms

- Data quality and pipeline reliability should sit with the teams responsible for system integration and platform management

- Business units should own the operational consequences of AI systems embedded into their workflows

- Legal and compliance teams should provide oversight for risk, ethics, and regulatory alignment

A centralized AI risk register allows organizations to document where AI is used, what risks are associated with it, and who is responsible for managing those risks. Regular decision audits should be conducted to review how AI outputs are being used, overridden, or misunderstood in real-world settings.
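As a rough illustration, a risk register can start as a very small data structure. The sketch below is one possible shape, not a prescribed format: the names `RiskEntry` and `RiskRegister`, the field names, and the sample entries are all hypothetical and chosen only to mirror the three questions above (where AI is used, what the risk is, and who owns it).

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class RiskEntry:
    """One row in the register (hypothetical fields for illustration)."""
    system: str                   # where AI is used
    risk: str                     # what could go wrong
    category: str                 # e.g. "technical", "operational", "legal"
    owner: Optional[str] = None   # who manages the risk; None means unassigned


@dataclass
class RiskRegister:
    """A centralized list of AI risks with simple ownership queries."""
    entries: List[RiskEntry] = field(default_factory=list)

    def add(self, entry: RiskEntry) -> None:
        self.entries.append(entry)

    def unowned(self) -> List[RiskEntry]:
        # Entries with no named owner are the accountability gaps.
        return [e for e in self.entries if not e.owner]

    def by_owner(self) -> Dict[str, List[RiskEntry]]:
        # Group owned entries so each team can see what it is responsible for.
        grouped: Dict[str, List[RiskEntry]] = defaultdict(list)
        for e in self.entries:
            if e.owner:
                grouped[e.owner].append(e)
        return dict(grouped)


# Sample entries (invented for this sketch).
register = RiskRegister()
register.add(RiskEntry("loan scoring model", "model drift degrades accuracy",
                       "technical", owner="data science"))
register.add(RiskEntry("fraud detection", "legitimate customers flagged",
                       "operational", owner="operations"))
register.add(RiskEntry("applicant screening", "bias against protected classes",
                       "legal"))

for entry in register.unowned():
    print(f"No owner assigned: {entry.system} ({entry.category})")
```

Even a simple query like `unowned()` makes the ownership gap visible before an incident does. In practice the register would more likely live in a shared governance or GRC tool than in code, but the same fields apply.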

Third-Party AI Tools Require Just As Much Oversight

The complexity of risk management increases when AI is delivered by vendors or embedded in external platforms. Many organizations rely on software that includes AI features, but rarely scrutinize those capabilities with the same diligence they apply to internal systems.

Procurement and legal teams must begin treating AI-powered platforms like infrastructure. Contracts should include clauses that define what happens if an AI system fails, how models are retrained, how performance is monitored, and who is responsible for errors or bias.

In addition to legal safeguards, organizations should adopt onboarding processes that bring legal, IT, and risk teams into the decision-making process for any third-party AI solution. If a vendor cannot explain how its model works or demonstrate governance maturity, the solution may not be ready for enterprise deployment.

Make AI Risk An Operational Responsibility

Orases helps organizations turn AI from a source of risk into a competitive asset. We work with executive teams to build scalable governance frameworks that assign ownership, align with regulations, and integrate risk thinking into every phase of the AI lifecycle.

If your business is moving toward AI as infrastructure, let’s start a conversation about how to manage it the right way.