Executives often enter AI initiatives expecting a direct line to ROI: deploy cutting-edge models, integrate them into operations, and wait for transformation. More often than not, the results fall short, not because the models are flawed, but because the decisions that drive value creation remain unchanged.

AI doesn’t generate ROI in isolation. It creates leverage only when the organization restructures how decisions are made, by whom, and with what confidence. If the same human bottlenecks and trust gaps persist post-deployment, the ROI you’re expecting will stall at the gate.

To achieve measurable impact, enterprises must move beyond pilot-phase thinking and begin rewiring their decision infrastructure for speed, automation, and accountability. This article lays out the structural shifts necessary to make AI truly operational and valuable.

The Myth Of Plug-and-Play ROI

The most common misstep in AI adoption is treating it like a standard IT upgrade: install the model, integrate the interface, collect the ROI. But unlike ERP systems or cloud platforms, AI doesn’t operate on fixed logic. It functions probabilistically, making recommendations based on patterns and likelihoods.

Executives often invest heavily in prediction systems for forecasting demand, detecting fraud, or scoring leads, yet see little difference in output quality or speed. Why? Because the surrounding decision workflows haven’t evolved. Insights go unused, or are overridden by manual processes driven by habit, gut feel, or politics.

Where Decision-Making Breaks AI

AI-driven lead scoring becomes irrelevant when sales teams ignore it. Inventory optimization fails when procurement sticks to old heuristics. Even high-performing risk models get overridden by legal teams unsure how to interpret the output.

What we’re seeing is a decision chain that hasn’t kept pace with the intelligence supporting it.

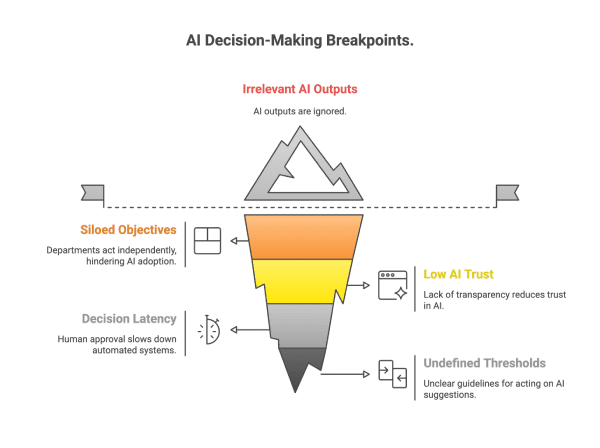

Here are the common breakpoints:

- Gatekeeping behavior by departments with siloed objectives

- Low trust in AI outputs due to lack of transparency or training

- Decision latency, where automated systems still require multi-step human approval

- Undefined thresholds for when to act on an AI suggestion versus escalate

The New Decision Architecture Required For AI

To unlock real ROI, organizations need to restructure how decisions are made. This means redefining decision rights, speed, and trust levels in the context of AI.

Start by distinguishing between:

- Human-in-the-loop: AI supports the decision, but humans make the final call.

- AI-in-the-loop: AI makes the decision within defined boundaries, escalating only when edge cases occur.

For example:

- A pricing model suggesting real-time discounts needs pre-cleared latitude, not a manual review queue.

- An AI-powered fraud detection system must have escalation criteria baked in, rather than waiting for human validation every time.

This transition requires not just policy changes, but cultural shifts in trust, accountability, and governance. It’s a move from seeing AI as a tool to seeing it as a participant in decision-making.

Operationalizing AI-Driven Decisions

So how do organizations actually make this work?

Build Cross-Functional Decision Teams

Bring together data scientists, business operators, compliance, and IT. Decision bottlenecks often exist between functions, not within them.

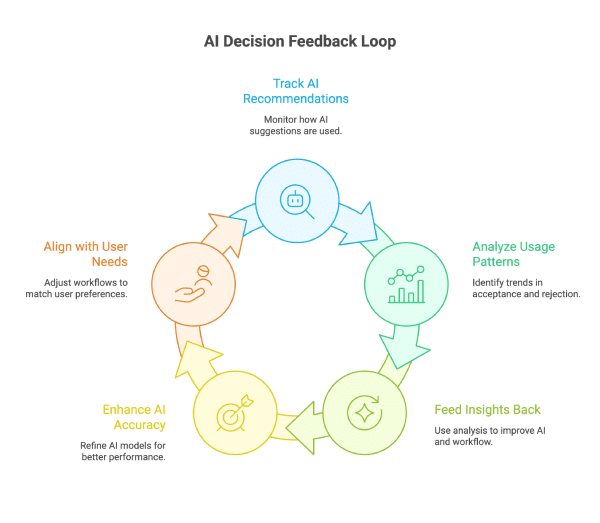

Establish Decision Feedback Loops

Route the outcomes of AI-assisted decisions, including human overrides, back to the teams that own the model and the workflow. Over time, these feedback loops become critical to building organizational trust in AI systems. When overrides occur, they should not be treated as simple exceptions but as learning opportunities that reveal gaps in the model’s context or the workflow’s constraints. Capturing both the reason for the override and the downstream impact allows teams to distinguish between necessary business judgment and avoidable friction.

This creates a more adaptive system, where the model evolves alongside policy changes, shifting priorities, and new inputs, rather than falling out of sync with the business it was designed to support.

Define Escalation Thresholds

Not all decisions need human oversight. Create rules around confidence scores, impact thresholds, or risk levels that allow automated decisions within safe boundaries.
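As a rough illustration, an escalation rule of this kind can be written down as a small, auditable policy object. The sketch below is a minimal example, not a recommended configuration: the names `EscalationPolicy` and `route_decision` are hypothetical, and the threshold values (90% confidence, $5,000 impact, risk level 2) are placeholder assumptions that a business owner would need to set.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_EXECUTE = "auto_execute"   # AI acts without waiting for approval
    HUMAN_REVIEW = "human_review"   # decision is escalated to a person


@dataclass(frozen=True)
class EscalationPolicy:
    # Hypothetical placeholder thresholds, not recommendations.
    min_confidence: float = 0.90    # model confidence score, 0.0 to 1.0
    max_impact_usd: float = 5_000.0  # largest financial exposure allowed
    max_risk_level: int = 2         # assumed scale: 1 = low, 3 = high


def route_decision(
    confidence: float,
    impact_usd: float,
    risk_level: int,
    policy: EscalationPolicy = EscalationPolicy(),
) -> Route:
    """Automate only when every boundary in the policy is respected."""
    within_bounds = (
        confidence >= policy.min_confidence
        and impact_usd <= policy.max_impact_usd
        and risk_level <= policy.max_risk_level
    )
    return Route.AUTO_EXECUTE if within_bounds else Route.HUMAN_REVIEW
```

Under these assumed thresholds, `route_decision(0.95, 1200.0, 1)` would be handled automatically, while a low-confidence or high-impact decision would be routed to a person. Keeping the thresholds in one explicit object makes them easy to review and adjust as trust in the model changes.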

Instrument Your Decisions

Every overridden AI recommendation should be logged and audited. What was the business outcome? Did the override help or hurt? Over time, this data becomes the backbone for continuous ROI improvement.
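One possible shape for such a log is sketched below. The record fields and the `override_summary` helper are hypothetical, and `outcome_delta_usd` assumes the business can estimate how the actual result compared with what following the AI recommendation would have produced, which is often the hardest part in practice.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DecisionRecord:
    decision_id: str
    ai_recommendation: str
    final_action: str
    override_reason: Optional[str] = None
    # Assumed metric: realized result minus the estimated result of
    # following the AI. Positive means the override helped.
    outcome_delta_usd: Optional[float] = None

    @property
    def overridden(self) -> bool:
        return self.final_action != self.ai_recommendation


def override_summary(records: List[DecisionRecord]) -> dict:
    """Summarize how often overrides happen and whether they paid off."""
    overrides = [r for r in records if r.overridden]
    # Only overrides with a measured outcome can be judged.
    scored = [r for r in overrides if r.outcome_delta_usd is not None]
    return {
        "decisions": len(records),
        "overrides": len(overrides),
        "override_rate": len(overrides) / len(records) if records else 0.0,
        "overrides_helped": sum(1 for r in scored if r.outcome_delta_usd > 0),
        "overrides_hurt": sum(1 for r in scored if r.outcome_delta_usd < 0),
    }
```

A summary like this, reviewed on a regular cadence, gives teams a factual basis for deciding whether to widen or narrow the boundaries within which the AI is allowed to act.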

Train For Interpretation, Not Programming

Business leaders don’t need to code, but they do need to understand what a 72% confidence score means, or how model drift might affect quarterly projections.
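One way to make a confidence score concrete is to check it against history: if a model is well calibrated, roughly 72 out of every 100 predictions scored near 72% should turn out to be correct. The sketch below is a simplified illustration of that check. The function name `observed_rate_near` and the bucket width are assumptions made for this example.

```python
from typing import List, Optional, Tuple


def observed_rate_near(
    scored_outcomes: List[Tuple[float, bool]],
    target: float = 0.72,
    width: float = 0.05,
) -> Optional[float]:
    """Share of past predictions scored within `width` of `target`
    that turned out to be correct. Returns None if there are none."""
    bucket = [ok for score, ok in scored_outcomes if abs(score - target) <= width]
    if not bucket:
        return None
    # True counts as 1 and False as 0, so the sum is the number correct.
    return sum(bucket) / len(bucket)
```

If the observed rate sits well below the stated score, the model is overconfident in that range, and if the gap widens over time, that is one practical sign of drift worth raising before it reaches quarterly projections.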

This is the infrastructure of AI-enabled decision-making, and without it, even the best models will stall in operational limbo.

AI ROI Doesn’t Start With The Model

Before your next AI investment, don’t ask what the model can predict. Ask what decisions the organization is struggling to make.

Where are the most expensive, error-prone, or slow-moving decisions happening? Where is human judgment weakest or most inconsistent? Where are delays leading to lost revenue, increased risk, or customer churn?

AI modernization is a multiplier. But it only multiplies what’s already structured to be acted on. If your current processes aren’t decision-ready, no model will save you.

Ready To Align AI With Real Business Outcomes?

Orases works with executive teams to modernize not just their technology but their decision infrastructure. If you’re investing in AI but not seeing the returns, we can help uncover where your systems, teams, and workflows are blocking value.

Let’s talk about how to turn AI from a technical project into a strategic advantage. Reach out to schedule a consultation.