A Guide To Advanced Web Application Development Strategies

Learn advanced strategies for modern web application development that scale, perform, and adapt to future needs.

Web application development has changed significantly over the years, shifting from static websites to highly interactive and data-driven platforms that drive modern business operations.

Organizations must now prioritize performance, usability, security, and scalability while adapting to ongoing innovations and shifts in technology. As web applications handle increasingly complex workflows and higher volumes of users, development strategies must align with both technical demands and broader business objectives.

Users expect fast, reliable applications that perform seamlessly on desktops, tablets, and smartphones, regardless of network conditions. Meanwhile, businesses require scalable architectures that maintain security against emerging threats and integrate innovations like artificial intelligence and machine learning to enhance functionality.

To help organizations stay ahead of the curve, this guide examines advanced web application development strategies that enhance performance, fortify security, and support long-term adaptability.

What Does “AI-Ready” Data Mean?

Data that is considered AI-ready meets a specific set of standards designed to support the demands of modern artificial intelligence systems.

It must be structured in a way that allows algorithms to interpret it without additional transformation, and it should be complete enough to represent the full range of inputs and patterns required by a given model. Quality still matters, of course, but instead of perfection, the focus shifts to relevance, context, and completeness in relation to the AI use case at hand.

Well-managed metadata, consistent labeling, and standardized formats all help make data interoperable with AI platforms and cloud-based infrastructure.
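The TypeScript sketch below roughly illustrates the completeness and labeling checks described above. It scans a labeled dataset for records that are missing required fields and for labels outside an agreed label set. The record shape (`text`, `label`), the label set, and the normalization rule are hypothetical assumptions chosen for the example. Real readiness checks depend on the specific AI use case.

```typescript
// Hypothetical record shape for a labeled text dataset.
interface LabeledRecord {
  text?: string;
  label?: string;
}

interface ReadinessReport {
  total: number;
  incomplete: number;    // records missing a required field
  unknownLabels: number; // records whose label is outside the agreed label set
}

function checkReadiness(records: LabeledRecord[], allowedLabels: Set<string>): ReadinessReport {
  let incomplete = 0;
  let unknownLabels = 0;
  for (const record of records) {
    if (!record.text || !record.label) {
      incomplete++; // incomplete records are counted once and not checked further
      continue;
    }
    // Normalize case and surrounding whitespace so "Spam " and "spam" count as one label.
    if (!allowedLabels.has(record.label.trim().toLowerCase())) {
      unknownLabels++;
    }
  }
  return { total: records.length, incomplete, unknownLabels };
}

const report = checkReadiness(
  [
    { text: "Win a prize now", label: "Spam " },
    { text: "Meeting at 3pm", label: "ham" },
    { text: "Invoice attached" },
    { text: "Hello", label: "junk" },
  ],
  new Set(["spam", "ham"]),
);
console.log(report); // { total: 4, incomplete: 1, unknownLabels: 1 }
```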

Data also needs to be accessible across systems while maintaining strict controls over who can use it, how it is used, and where it flows. Compliance with regulations such as GDPR or HIPAA is a baseline requirement, particularly as AI initiatives touch sensitive or regulated domains.

Rather than treating AI-readiness as a blanket condition, the readiness of data must always be evaluated based on how it will be used. A dataset suitable for training a simulation model might be entirely different from what is required for a generative language model, even within the same organization.

Chapter 01

Progressive Web Applications & Mobile-First Development

Web applications must deliver a seamless experience across devices and network conditions. With mobile traffic accounting for 58% of global web usage, businesses need development strategies that prioritize performance, accessibility, and usability on smaller screens.

Progressive web applications and mobile-first development address these demands by improving speed, engagement, and adaptability.

Users expect web applications that load quickly, work offline when needed, and deliver a smooth experience regardless of location or device. PWAs offer app-like functionality without requiring downloads from an app store, while mobile-first development prioritizes optimization for smaller screens before scaling to larger devices, resulting in responsive and efficient applications.

Combining these two strategies improves performance, simplifies development, and increases user retention.

What Are Progressive Web Applications?

PWAs merge web and mobile app functionality, delivering faster, more reliable experiences to users directly through the browser.

Modern web APIs, including Service Workers and Web App Manifests, allow PWAs to work offline, send push notifications, and be installed on a device without requiring users to go through an app store. These capabilities make them an effective alternative to native applications.

Unlike traditional web applications, PWAs can remain usable in low-connectivity environments by caching essential content in advance. Faster load times enhance usability, reducing bounce rates and keeping users engaged. Push notifications further drive interaction by delivering updates and promotions directly to users.
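Offline support of this kind usually comes from a service worker that answers requests from a local cache before it tries the network. The sketch below models that cache-first strategy in plain TypeScript. A `Map` stands in for the browser's Cache Storage API, and a synchronous function stands in for the network. The names `cacheFirst` and `NetworkFetch` are illustrative assumptions, not browser APIs.

```typescript
// Simplified model of a cache-first strategy. A real service worker would run this
// inside a "fetch" event handler using the asynchronous Cache Storage API.
type NetworkFetch = (url: string) => string | undefined; // undefined models "offline"

function cacheFirst(url: string, cache: Map<string, string>, network: NetworkFetch): string | undefined {
  const cached = cache.get(url);
  if (cached !== undefined) {
    return cached; // served locally: no network round trip, works offline
  }
  const fresh = network(url);
  if (fresh !== undefined) {
    cache.set(url, fresh); // keep a copy for the next request
  }
  return fresh;
}

// Precache the app shell (as a service worker might at install time), then go offline.
const shellCache = new Map<string, string>([["/index.html", "<html>app shell</html>"]]);
const offline: NetworkFetch = () => undefined;

console.log(cacheFirst("/index.html", shellCache, offline)); // prints <html>app shell</html>
console.log(cacheFirst("/news.json", shellCache, offline));  // prints undefined (never cached)
```

In an actual service worker, the same decision would use `caches.match()` and `cache.put()`, both of which return promises. Cache-first suits static assets such as the app shell. Frequently changing data is often served network-first instead.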

PWAs also help cut down on development complexity since a single codebase works across multiple platforms, removing the need to build separate apps for iOS and Android.

Updates deploy instantly through the browser, bypassing app store approval delays. As a result, businesses that adopt PWAs can cut development costs, simplify maintenance, and deliver a consistent experience across devices.

Leading brands have already demonstrated that PWAs drive higher engagement by delivering seamless, app-like experiences on the web. The Twitter/X Lite PWA reduces data usage by up to 70% while also improving load times, making it more accessible in areas with slow connections.

Meanwhile, Starbucks introduced a PWA for online orders, doubling daily active users while bringing desktop engagement in line with mobile app performance. These results highlight the benefits of a web-first approach to application development.

Orases successfully developed a web-based Healthcare Technology Management (HTM) Levels Guide for AAMI, transforming their assessment process into an interactive, user-friendly digital tool that dramatically reduced processing time while increasing user engagement and assessment completions.

Benefits Of Mobile-First Development

Starting with mobile design establishes a strong foundation for web applications, allowing for smooth scaling to larger screens.

Since Google ranks mobile-friendly sites higher, applications that don’t cater to smaller screens may struggle with search visibility. Mobile-first development improves accessibility and usability while directly impacting discoverability.

A bad mobile experience can cost businesses more than a single visit; it can drive away users who never come back. Research from Google shows that 61% of users don’t return to a website that is difficult to navigate on a smartphone.

Slow load times, cluttered interfaces, poor design, and unresponsive elements all reduce engagement and increase abandonment rates. A mobile-first approach avoids these issues by focusing on speed, simplicity, and usability from the start.

There are several design principles that serve to improve mobile functionality. For instance, fluid grids and flexible layouts allow content to adapt to different screen sizes, preventing elements from appearing distorted or misaligned.

Touch-friendly interfaces improve interaction by making buttons and menus easier to use on small screens. Proper content prioritization can help remove unnecessary distractions, keeping the interface streamlined and focused on what users need most.

Orases modernized Atlas Home Energy Solutions’ audit process with a digital application that slashed proposal delivery times and enabled business growth.

Creating Seamless User Experiences Across Devices

Users frequently switch between devices, often beginning an interaction on a phone and completing it on a desktop. Having a consistent experience across platforms improves usability, strengthens engagement, prevents frustration, and retains users.

Responsive design plays a major role in keeping the experience consistent: layouts, fonts, and images should adjust automatically to the screen size without requiring separate versions for different devices. A familiar interface across mobile, tablet, and desktop environments prevents confusion and improves efficiency.

Data synchronization is another important factor to keep in mind, as users tend to expect applications to remember their progress regardless of the device they’re using.

Features such as cloud-based state management save information in real time, so actions taken on one device are reflected almost immediately on another. Single sign-on (SSO) simplifies authentication by allowing users to log in once and access their data across multiple platforms without re-entering credentials.
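A last-write-wins merge is one simple way to reconcile state that was edited on two devices. The sketch below assumes that every change carries a timestamp from a trustworthy shared clock. The field names and timestamps are hypothetical.

```typescript
// Each synced field records its value and when it was last changed.
interface FieldValue {
  value: string;
  updatedAt: number; // milliseconds since epoch, assumed to come from a shared clock
}
type SyncedState = Record<string, FieldValue>;

// Last-write-wins: for each field, keep whichever device changed it most recently.
function mergeLastWriteWins(local: SyncedState, remote: SyncedState): SyncedState {
  const merged: SyncedState = { ...local };
  for (const [key, remoteField] of Object.entries(remote)) {
    const localField = merged[key];
    if (localField === undefined || remoteField.updatedAt > localField.updatedAt) {
      merged[key] = remoteField;
    }
  }
  return merged;
}

const phone: SyncedState = {
  cartItems: { value: "shoes,socks", updatedAt: 2000 },
  theme: { value: "dark", updatedAt: 1000 },
};
const desktop: SyncedState = {
  cartItems: { value: "shoes", updatedAt: 1500 },
  theme: { value: "light", updatedAt: 3000 },
};

const state = mergeLastWriteWins(phone, desktop);
console.log(state.cartItems?.value, state.theme?.value); // shoes,socks light
```

Last-write-wins is easy to reason about, but it silently discards the older of two concurrent edits. Applications where that matters, such as collaborative editors, typically need richer conflict resolution.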

Beyond responsive design and synchronization, businesses must carefully consider how their applications function within a broader digital ecosystem. Omnichannel strategies unify web, mobile, and in-store interactions, allowing users to move smoothly between platforms.

Users should be able to browse products on a website, add items to their cart, and seamlessly complete their purchase later through a mobile app without losing their selections. Ensuring a smooth, uninterrupted experience across all touchpoints enhances user engagement, increases satisfaction, and improves long-term retention.

Orases created a custom inspection app for Safety On Site that simplified compliance, reduced insurance costs, and improved safety processes in the logging industry.

Optimizing For Performance & Load Times

The speed of a web application directly affects usability, engagement, retention, and search rankings.

Users begin abandoning sites when load times exceed three seconds, making proper performance optimization essential to development. A high-performing, fast application keeps users happy while improving SEO rankings.

Compressing images helps web pages load faster while still keeping visuals sharp and engaging.

Minifying CSS and JavaScript cuts down on unnecessary characters, reducing the amount of data that browsers need to process. Asynchronous loading prevents large scripts from delaying content rendering, allowing the most important elements to appear quickly.

Caching also significantly improves performance. Service Workers, for example, can store frequently accessed assets and data locally, enabling offline functionality and reducing repeated server requests.

Content delivery networks (CDNs) distribute assets across multiple geographic locations, enabling users to retrieve content from the closest available server. Faster access to resources minimizes latency and improves responsiveness.

Regular performance monitoring helps identify bottlenecks before they affect users. Tools such as Google Lighthouse analyze page speed and highlight specific areas for improvement. Ongoing optimization keeps applications running efficiently as requirements change.
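To make monitoring actionable, teams often summarize real-user load times with a percentile rather than an average, because a few fast visits can hide many slow ones. Google's Core Web Vitals assessment, for instance, uses the 75th percentile. The sketch below computes a nearest-rank percentile over hypothetical load-time samples and compares the result with a three-second budget.

```typescript
// Nearest-rank percentile: the smallest sample such that at least p% of samples are <= it.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) {
    throw new Error("cannot take a percentile of zero samples");
  }
  const sorted = [...samples].sort((a, b) => a - b);
  // 1-based rank, clamped to [1, n] so p = 0 and p = 100 both stay in range.
  const rank = Math.min(sorted.length, Math.max(1, Math.ceil((p / 100) * sorted.length)));
  return sorted[rank - 1]!;
}

const LOAD_BUDGET_MS = 3000; // the three-second threshold discussed above
// Hypothetical page-load times (ms) collected from real users.
const loadTimesMs = [1200, 1800, 950, 4100, 2600, 3300, 1500, 2100];

const p75 = percentile(loadTimesMs, 75);
console.log(`p75 = ${p75} ms, within budget: ${p75 <= LOAD_BUDGET_MS}`);
// p75 = 2600 ms, within budget: true
```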

Combined, these strategies create a web application that is fast, responsive, and reliable. Speed, usability, and consistency drive engagement and retention, making them essential considerations for any modern development approach.

Chapter 02

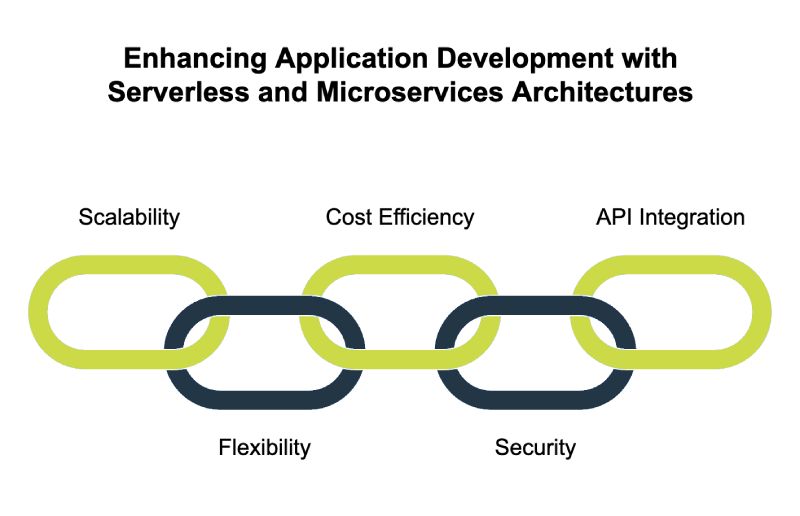

Serverless Architecture & Microservices

Traditional monolithic applications have been a cornerstone of web development, but they frequently pose challenges in scalability, adaptability, and maintenance.

Serverless computing and microservices offer an alternative approach that simplifies infrastructure management, improves deployment speed, and provides greater adaptability for modern applications. These architectural models allow businesses to scale efficiently while reducing operational overhead.

Without the burden of server maintenance, developers using serverless computing can prioritize innovation and efficiency in application development. Meanwhile, microservices break applications into smaller, independent components, helping teams to develop and deploy features separately.

Together, these approaches support applications that handle high traffic, adapt quickly to demand, and maintain long-term reliability with minimal infrastructure concerns.

Serverless Architecture Explained

Serverless architecture allows applications to operate without requiring developers to manage physical or virtual servers.

Cloud providers such as AWS, Microsoft Azure, and Google Cloud handle server provisioning, scaling, and maintenance, allowing developers to focus entirely on writing and deploying code. Functions execute on demand, responding to HTTP requests, database changes, background tasks, or similar events.
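A serverless function is essentially a handler that the platform invokes once per event. The sketch below shows an HTTP-triggered function that creates an order. The `HttpEvent` and `HttpResult` shapes are hypothetical. They are loosely modeled on the `statusCode`/`body` format that AWS Lambda uses behind API Gateway, and each provider's actual event format differs.

```typescript
// Hypothetical event/result shapes, loosely modeled on an HTTP-triggered function.
interface HttpEvent {
  httpMethod: string;
  path: string;
  body: string | null;
}

interface HttpResult {
  statusCode: number;
  body: string;
}

// The platform invokes this once per request; the developer manages no server process.
function handleCreateOrder(evt: HttpEvent): HttpResult {
  if (evt.httpMethod !== "POST" || evt.path !== "/orders") {
    return { statusCode: 404, body: JSON.stringify({ error: "not found" }) };
  }
  let parsed: unknown;
  try {
    parsed = JSON.parse(evt.body ?? "");
  } catch {
    return { statusCode: 400, body: JSON.stringify({ error: "body must be valid JSON" }) };
  }
  const order = (parsed ?? {}) as { item?: unknown; quantity?: unknown };
  if (typeof order.item !== "string" || typeof order.quantity !== "number") {
    return { statusCode: 400, body: JSON.stringify({ error: "item and quantity are required" }) };
  }
  // A real function would persist the order here, e.g. to a managed database.
  return { statusCode: 201, body: JSON.stringify({ accepted: order.item, quantity: order.quantity }) };
}

const result = handleCreateOrder({
  httpMethod: "POST",
  path: "/orders",
  body: JSON.stringify({ item: "mug", quantity: 2 }),
});
console.log(result.statusCode, result.body); // 201 {"accepted":"mug","quantity":2}
```

Because the handler keeps no state between calls, the platform is free to run as many copies as incoming traffic requires.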

Serverless platforms scale automatically, provisioning resources to match workload demand in real time. Applications consume only what they need, which prevents resource waste and removes the burden of manual scaling.

A pay-for-what-you-use pricing model provides further efficiency. Unlike traditional servers that charge for reserved capacity, serverless applications incur costs only when code executes. This makes the model cost-effective for applications with unpredictable traffic patterns, reducing expenses when demand is low.

Reduced infrastructure maintenance is another advantage. The cloud provider handles server management, operating system patching, and runtime updates, freeing developers from most operational concerns and streamlining development cycles. Developers remain responsible for securing their own code, dependencies, and configurations.

Serverless computing is particularly effective for event-driven applications, where functions trigger automatically in response to specific actions. Real-time data processing, API backends, and scheduled tasks all benefit from this approach. Chat applications, image processing services, and IoT systems frequently use serverless functions to handle bursts of activity without requiring dedicated servers.

Despite its benefits, serverless computing is not ideal for every workload. Long-running processes, applications with complex state management, and workloads requiring consistent performance may face limitations.

Latency-sensitive applications may also suffer from cold starts, in which dormant functions take extra time to initialize before executing. Businesses evaluating serverless architecture must weigh these limitations against the cost savings and scalability it offers.
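Because of cold starts, serverless code is often structured so that expensive setup runs once per function instance rather than once per request. In the sketch below, `loadConfig` is a hypothetical stand-in for slow initialization, such as fetching secrets or opening database connections. Module-scope state survives between invocations only while the platform keeps the instance warm. The first call therefore pays the setup cost, and later calls reuse the result.

```typescript
let initCount = 0;
let cachedConfig: Map<string, string> | undefined; // module scope: survives warm invocations

// Hypothetical slow setup step (reading secrets, opening DB connections, and so on).
function loadConfig(): Map<string, string> {
  initCount++;
  return new Map([["region", "us-east-1"]]);
}

function coldStartAwareHandler(): string {
  // Initialize lazily: only the first (cold) invocation on this instance runs loadConfig().
  const config = cachedConfig ?? (cachedConfig = loadConfig());
  return config.get("region") ?? "unknown";
}

coldStartAwareHandler(); // cold start: runs loadConfig()
coldStartAwareHandler(); // warm: reuses cachedConfig
console.log(initCount); // 1
```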

When To Use Microservices Over Monolithic Applications

Microservices architecture breaks applications into independent, function-specific services that communicate via APIs, allowing for greater flexibility in development, deployment, and scaling.

Unlike monolithic applications, in which a single codebase controls all functionality, microservices distribute workloads across multiple self-contained components.

Scalability is a major strength of microservices, as independent services allow businesses to scale specific functions without overprovisioning the entire system. A streaming service, for example, may scale its recommendation engine separately from its user authentication system, optimizing performance and cost efficiency.

Netflix is a well-known example of a company that migrated to microservices, allowing different features, such as video playback, content recommendations, and billing, to operate independently without affecting the entire platform.

Faster development cycles and easier updates also make microservices an attractive choice. Because teams can build and release separate services in parallel, microservices reduce the cross-team dependencies that slow down releases, making deployment more agile.

Adopting a microservices approach allows organizations to deploy changes to one part of the application without requiring a full system update, decreasing downtime and improving agility.

Fault isolation provides some additional stability, ensuring that an error in one service does not disrupt the entire application.

A failure in the notification service, for example, wouldn’t disrupt the checkout process in an e-commerce application. Fault isolation improves reliability, making applications more resilient to unexpected failures.
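Fault isolation often depends on how a caller treats each of its dependencies. In the sketch below, a hypothetical `checkout` function lets a payment failure abort the order. A failure in the notification service is contained, so the order still completes. The two service functions are placeholders for network calls to separate microservices.

```typescript
interface OrderResult {
  orderId: string;
  confirmationSent: boolean;
}

// chargePayment and sendConfirmation are hypothetical stand-ins for two separate services.
function checkout(
  chargePayment: () => string,                 // critical path: returns an order ID or throws
  sendConfirmation: (orderId: string) => void, // non-critical notification service
): OrderResult {
  const orderId = chargePayment(); // a payment failure propagates and fails the checkout
  let confirmationSent = true;
  try {
    sendConfirmation(orderId);
  } catch (err) {
    // Contain the failure: record it (a real system might queue a retry) and keep the order.
    console.error("notification failed:", err instanceof Error ? err.message : err);
    confirmationSent = false;
  }
  return { orderId, confirmationSent };
}

const outcome = checkout(
  () => "order-123",
  () => {
    throw new Error("notification service unavailable");
  },
);
console.log(outcome); // { orderId: 'order-123', confirmationSent: false }
```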

Despite these advantages, microservices also introduce new challenges. Managing multiple services requires additional infrastructure, such as API gateways, service discovery mechanisms, and monitoring tools.

Deployment and orchestration become even more complex as the number of services increases over time. Businesses must assess whether the benefits of flexibility and scalability outweigh the operational complexity before shifting to a microservices model.

Challenges With Serverless & Microservices

While serverless computing and microservices improve scalability and flexibility, they introduce complexity in deployment, monitoring, and communication. Running multiple independent services or functions requires additional coordination, increasing the need for well-defined architecture and operational management.

Handling multiple services creates challenges in monitoring and debugging. With a monolithic system, all logs and errors are contained in one place, making troubleshooting more straightforward.

Microservices and serverless applications distribute functionality across different components, requiring centralized logging and distributed tracing to track failures. Without proper observability, diagnosing issues across multiple services becomes difficult.
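A common building block for distributed tracing is a correlation (trace) ID. The ID is generated when a request enters the system and passed to every downstream service, which includes it in each log line. The sketch below simulates two hypothetical services that write to an in-memory array standing in for a centralized log store. Over HTTP, the ID is usually carried in a header, such as the W3C Trace Context `traceparent` header.

```typescript
interface LogEntry {
  traceId: string;
  service: string;
  message: string;
}

const centralLog: LogEntry[] = []; // stands in for a centralized log store

function writeLog(traceId: string, service: string, message: string): void {
  centralLog.push({ traceId, service, message });
}

// Hypothetical downstream service: receives the trace ID along with the request.
function inventoryService(traceId: string, sku: string): boolean {
  writeLog(traceId, "inventory", `checking stock for ${sku}`);
  return sku !== "out-of-stock";
}

// Hypothetical entry-point service: passes the same trace ID to everything it calls.
function orderService(traceId: string, sku: string): boolean {
  writeLog(traceId, "orders", "order received");
  const inStock = inventoryService(traceId, sku);
  writeLog(traceId, "orders", inStock ? "order accepted" : "order rejected: no stock");
  return inStock;
}

orderService("trace-a1", "mug");
orderService("trace-b2", "out-of-stock");

// Debugging one request: filter the central log by its trace ID to see every hop in order.
const trail = centralLog
  .filter((entry) => entry.traceId === "trace-b2")
  .map((entry) => `${entry.service}: ${entry.message}`);
for (const line of trail) console.log(line);
// orders: order received
// inventory: checking stock for out-of-stock
// orders: order rejected: no stock
```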

Latency concerns arise in serverless environments due to cold starts. When a function is inactive for a period, the first execution experiences a delay as the cloud provider initializes resources.

While caching, provisioned concurrency, and optimizing function execution times can reduce this issue, applications requiring consistently low-latency responses may face performance challenges.

Network communication between microservices introduces another layer of complexity. Unlike monolithic applications, where function calls occur within the same process, microservices rely on API calls over the network.

This setup increases the potential for delays, failures, and security vulnerabilities. Using service meshes, implementing circuit breakers, and optimizing API calls are strategies to help manage these risks.
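A circuit breaker stops a service from repeatedly calling a dependency that is failing. The sketch below implements the usual three states:

- closed: calls pass through;
- open: calls fail fast;
- half-open: one trial call is allowed after a cool-down.

The threshold and timing values are illustrative. Time is passed in explicitly so the behavior is easy to test.

```typescript
type BreakerState = "closed" | "open" | "half-open";

class CircuitBreaker {
  private state: BreakerState = "closed";
  private consecutiveFailures = 0;
  private openedAtMs = 0;
  private readonly failureThreshold: number;
  private readonly resetAfterMs: number;

  constructor(failureThreshold: number, resetAfterMs: number) {
    this.failureThreshold = failureThreshold; // consecutive failures before the circuit opens
    this.resetAfterMs = resetAfterMs;         // cool-down before a trial call is allowed
  }

  get currentState(): BreakerState {
    return this.state;
  }

  call<T>(fn: () => T, nowMs: number): T {
    if (this.state === "open") {
      if (nowMs - this.openedAtMs < this.resetAfterMs) {
        throw new Error("circuit open: failing fast without calling the dependency");
      }
      this.state = "half-open"; // cool-down elapsed: let one trial call through
    }
    try {
      const value = fn();
      this.state = "closed"; // any success, including a trial call, closes the circuit
      this.consecutiveFailures = 0;
      return value;
    } catch (err) {
      this.consecutiveFailures += 1;
      // A failed trial call, or too many failures in a row, opens the circuit.
      if (this.state === "half-open" || this.consecutiveFailures >= this.failureThreshold) {
        this.state = "open";
        this.openedAtMs = nowMs;
      }
      throw err;
    }
  }
}

const breaker = new CircuitBreaker(2, 1000); // open after 2 failures, retry after 1 s
const failingCall = (): string => {
  throw new Error("downstream timeout");
};

for (const t of [0, 10]) {
  try {
    breaker.call(failingCall, t);
  } catch {
    // expected: the dependency is down
  }
}
console.log(breaker.currentState); // open

// After the cool-down, one trial call goes through; success closes the circuit.
console.log(breaker.call(() => "ok", 1500), breaker.currentState); // ok closed
```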

Security must also be considered when deploying serverless functions and microservices. Each function or service requires its own authentication and authorization, increasing the risk of misconfigurations that could expose sensitive data.

Strict access policies, encryption protocols, and routine security audits help keep distributed applications protected against emerging threats.

While these challenges introduce additional overhead, careful architectural planning and the right tools help mitigate risks. Well-structured monitoring, efficient API design, and automated deployment processes improve stability and performance in distributed environments.

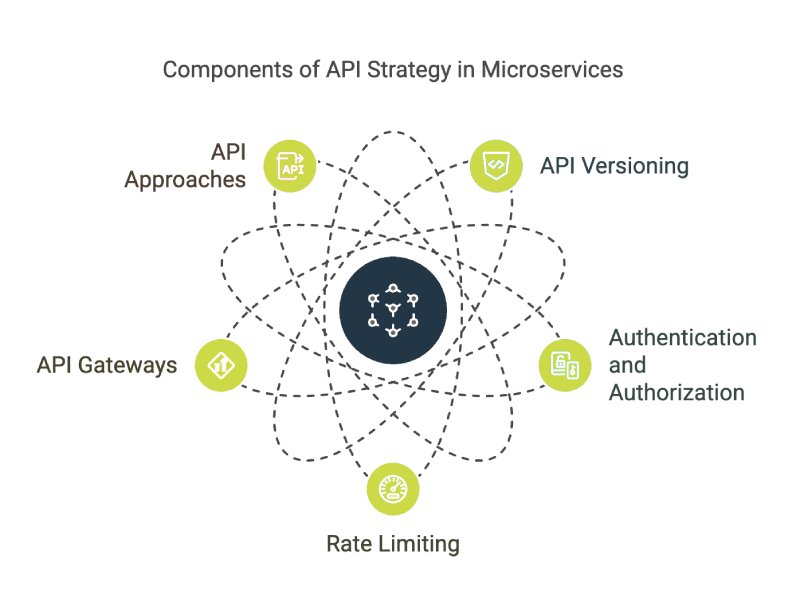

Integrating Microservices & APIs For Modular Systems

Reliable communication between microservices depends on well-structured APIs that allow independent components to exchange data efficiently.

Having a clear API strategy helps maintain performance, security, and manageability of the app as different services interact. Designing APIs with proper versioning prevents compatibility issues when making updates.

Instead of breaking existing functionality, API versioning allows applications to introduce changes without disrupting consumers. Common versioning strategies include URL-based versions (e.g., /v1/orders vs. /v2/orders) and request headers that indicate the requested API version.
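The sketch below illustrates URL-based versioning. It routes `/v1/orders` and `/v2/orders` to separate response formats for the same hypothetical order record. Existing v1 consumers keep working even though v2 changes the shape of the total field. The record fields and the v1/v2 formats are assumptions made for the example.

```typescript
interface Order {
  id: string;
  totalCents: number;
}

// v1 returns a formatted decimal string; v2 (hypothetically) switched to integer cents.
// Both stay live so existing v1 consumers are not broken by the change.
const renderersByVersion: Record<string, (order: Order) => object> = {
  v1: (order) => ({ id: order.id, total: (order.totalCents / 100).toFixed(2) }),
  v2: (order) => ({ id: order.id, totalCents: order.totalCents }),
};

function routeOrderRequest(path: string, order: Order): object {
  const match = /^\/(v\d+)\/orders$/.exec(path); // e.g. "/v2/orders" -> "v2"
  const version = match?.[1];
  const render = version === undefined ? undefined : renderersByVersion[version];
  if (render === undefined) {
    throw new Error(`unsupported API version in path: ${path}`);
  }
  return render(order);
}

const sample: Order = { id: "A-1", totalCents: 1999 };
console.log(routeOrderRequest("/v1/orders", sample)); // { id: 'A-1', total: '19.99' }
console.log(routeOrderRequest("/v2/orders", sample)); // { id: 'A-1', totalCents: 1999 }
```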

Authentication and authorization play a major role in securing microservices. Proper identity management, including OAuth2 or JSON Web Tokens, controls access to APIs. Role-based access policies ensure only the appropriate services and users interact with specific endpoints, reducing the risk of unauthorized data exposure.

Rate limiting protects APIs from abuse by restricting the number of requests a user or service can make within a given timeframe. This prevents excessive load from overwhelming individual microservices and enhances overall system stability. API gateways, such as AWS API Gateway and Kong, manage authentication, rate limiting, and request routing for microservices-based applications.
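Rate limiting is commonly implemented with a token bucket, which allows short bursts while enforcing a sustained average rate. The sketch below keeps one bucket per API key in memory. The capacity of 3 and the refill rate of 1 token per second are arbitrary example values. A production gateway would typically keep this state in a shared store so that every instance enforces the same limit.

```typescript
// Token bucket: a client may burst up to `capacity` requests; tokens refill continuously.
class TokenBucket {
  private tokens: number;
  private lastRefillMs: number;
  private readonly capacity: number;
  private readonly refillPerSecond: number;

  constructor(capacity: number, refillPerSecond: number, nowMs: number) {
    this.capacity = capacity;
    this.refillPerSecond = refillPerSecond;
    this.tokens = capacity; // start full
    this.lastRefillMs = nowMs;
  }

  tryConsume(nowMs: number): boolean {
    // Add tokens for the time elapsed since the last check, never exceeding capacity.
    const elapsedSeconds = (nowMs - this.lastRefillMs) / 1000;
    this.tokens = Math.min(this.capacity, this.tokens + elapsedSeconds * this.refillPerSecond);
    this.lastRefillMs = nowMs;
    if (this.tokens >= 1) {
      this.tokens -= 1; // spend one token on this request
      return true;
    }
    return false; // over the limit
  }
}

// One bucket per API key: burst of 3, sustained 1 request/second (example values).
const buckets = new Map<string, TokenBucket>();

function allowRequest(apiKey: string, nowMs: number): boolean {
  let bucket = buckets.get(apiKey);
  if (bucket === undefined) {
    bucket = new TokenBucket(3, 1, nowMs);
    buckets.set(apiKey, bucket);
  }
  return bucket.tryConsume(nowMs);
}

const decisions = [0, 100, 200, 300, 1400].map((t) => allowRequest("client-a", t));
console.log(decisions); // [ true, true, true, false, true ]
```

A rejected request would normally receive an HTTP 429 (Too Many Requests) response.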

GraphQL and REST represent two common approaches to API communication. RESTful APIs provide a standard structure for data exchange, relying on HTTP methods such as GET, POST, and DELETE. With its flexible query structure, GraphQL delivers only requested data, reducing bandwidth consumption and improving application performance.

Choosing the right API approach depends on application requirements, with REST offering simplicity and GraphQL providing greater flexibility for complex data queries.

API gateways serve as intermediaries between clients and microservices, handling request routing, security enforcement, and performance optimizations. Kong, NGINX, and similar tools provide centralized management for API traffic, improving scalability and reliability.

Having a well-structured API strategy in place helps make sure that microservices communicate effectively while maintaining security and performance. Thoughtful API design, combined with strong access controls and monitoring, supports scalable and efficient modular applications.

Effectively communicating the needs and requirements of a custom web application to a custom software application development team can be a difficult process, especially for those who aren’t exactly tech savvy. User Stories are used as an effective tool to promote transparency, project requirements & confirmation of feature goals among team members and stakeholders. Learn how to create User Stories by downloading our e-book here!

Chapter 03

Web Application Security & Advanced Authentication

Cyberattacks on web applications have greatly increased in frequency, scope, and sophistication in recent years. Attackers routinely target vulnerabilities such as SQL injection, cross-site scripting (XSS), and broken authentication mechanisms to steal sensitive data or compromise systems.

Security breaches result in financial losses, regulatory penalties, and damaged reputations, making it essential for businesses to implement effective security measures.

Strong authentication protocols, secure coding practices, and proactive testing help prevent unauthorized access and data leaks. Addressing security at every stage of development reduces risks and strengthens application integrity.

Best Practices For Secure Web Application Development

Cybercriminals often exploit common weaknesses in web applications to gain unauthorized access to systems.

The OWASP Top 10, a widely recognized list of security risks, outlines the most frequent and damaging threats, including injection attacks, broken authentication, security misconfigurations, and inadequate access controls. Developers must incorporate protective measures to defend against these vulnerabilities.

SQL injection and similar attacks occur when applications insert unsanitized user input directly into queries, giving attackers control over how those queries execute.

Preventing these attacks requires parameterized queries and prepared statements, which separate query structure from user-supplied data. Similarly, XSS, where attackers inject malicious scripts into webpages, can be mitigated through input validation and proper output encoding to prevent untrusted data from being executed as code.
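Output encoding converts characters that have special meaning in HTML into entities before untrusted data is inserted into a page. The browser then renders those characters as text instead of executing them. The minimal `escapeHtml` function below is a sketch that covers HTML element content and quoted attribute values only. URLs, inline scripts, and CSS need context-specific encoding. Many template engines and frameworks already escape interpolated values by default. On the database side, the equivalent protection is to pass user input as query parameters rather than concatenating it into the SQL string.

```typescript
// Encode the five characters that are significant in HTML text and quoted attributes.
function escapeHtml(untrusted: string): string {
  return untrusted
    .replace(/&/g, "&amp;") // must run first so the entities added below are not re-encoded
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

const comment = `<script>alert("hi")</script>`; // hypothetical user-submitted comment
const html = `<p class="comment">${escapeHtml(comment)}</p>`;
console.log(html);
// <p class="comment">&lt;script&gt;alert(&quot;hi&quot;)&lt;/script&gt;</p>
```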

Security misconfigurations, another common risk, often stem from default settings, overly permissive permissions, and unpatched software. Regularly updating dependencies, removing unnecessary features, and applying the principle of least privilege significantly reduce exposure to attacks.

Without HTTPS (SSL/TLS), sensitive data is exposed to potential interception, making encryption a non-negotiable security standard for web applications. Secure transport prevents attackers from intercepting or modifying data in transit, reducing the likelihood of session hijacking and man-in-the-middle attacks.

Implementing Content Security Policy (CSP) restricts the sources from which a webpage can load scripts, images, and stylesheets, minimizing the risk of malicious content execution.
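A Content Security Policy is delivered as the `Content-Security-Policy` HTTP response header, or alternatively in a `<meta>` tag. The sketch below assembles the header value from a map of directives. The directive names (`default-src`, `script-src`, `img-src`, `object-src`) come from the CSP specification. The allowed sources, including `https://cdn.example.com`, are placeholder values.

```typescript
type CspDirectives = Record<string, string[]>;

// Serialize directives into a header value: "directive source source; directive source ..."
function buildCsp(directives: CspDirectives): string {
  return Object.entries(directives)
    .map(([directive, sources]) => `${directive} ${sources.join(" ")}`)
    .join("; ");
}

const policy = buildCsp({
  "default-src": ["'self'"],                           // fallback for fetch directives not listed
  "script-src": ["'self'", "https://cdn.example.com"], // scripts only from this origin and one CDN
  "img-src": ["'self'", "data:"],
  "object-src": ["'none'"],                            // block plugin content such as <object>/<embed>
});

// Sent as the value of the Content-Security-Policy response header.
console.log(policy);
// default-src 'self'; script-src 'self' https://cdn.example.com; img-src 'self' data:; object-src 'none'
```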

Access controls also play a major role in application security. Role-based access control (RBAC) and attribute-based access control (ABAC) restrict user permissions based on predefined rules, preventing unauthorized users from accessing sensitive information or performing restricted actions.
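A few lines are enough to show the difference between the two models. In the sketch below, roles map to allowed actions (RBAC). A hypothetical attribute rule adds an ABAC-style check on top: non-admin users may act only on resources owned by their own department. Access is denied unless both checks pass. The roles, actions, and departments are illustrative.

```typescript
type Role = "viewer" | "editor" | "admin";
type Action = "read" | "write" | "delete";

// RBAC: permissions belong to roles; users receive them only through their role.
const rolePermissions: Record<Role, ReadonlySet<Action>> = {
  viewer: new Set<Action>(["read"]),
  editor: new Set<Action>(["read", "write"]),
  admin: new Set<Action>(["read", "write", "delete"]),
};

interface AppUser {
  id: string;
  role: Role;
  department: string;
}

interface ProtectedResource {
  ownerDepartment: string;
}

function canPerform(user: AppUser, action: Action, resource: ProtectedResource): boolean {
  const roleAllows = rolePermissions[user.role].has(action);
  // ABAC-style rule (hypothetical): non-admins may only act on their own department's resources.
  const attributesAllow = user.role === "admin" || user.department === resource.ownerDepartment;
  return roleAllows && attributesAllow; // deny unless both checks pass
}

const alice: AppUser = { id: "u1", role: "editor", department: "finance" };
console.log(canPerform(alice, "write", { ownerDepartment: "finance" }));  // true
console.log(canPerform(alice, "delete", { ownerDepartment: "finance" })); // false (role lacks permission)
console.log(canPerform(alice, "read", { ownerDepartment: "hr" }));        // false (attribute rule)
```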

Implementing multi-layered authentication and authorization mechanisms further strengthens security so that only legitimate users can access protected areas.

Advanced Authentication Methods

Weak authentication mechanisms leave web applications vulnerable to account takeovers and other forms of data breaches.

Relying solely on passwords is no longer an effective security strategy, as credential stuffing attacks and phishing attempts continue to rise and become more sophisticated. Implementing advanced authentication techniques significantly improves account security.

Multi-factor authentication (MFA) reduces these risks by requiring an additional verification step, so a stolen password alone is not enough to gain access. Common methods include one-time codes sent via SMS or email, authenticator apps, and hardware security keys.

OAuth2 and single sign-on (SSO) can simplify authentication while improving security. OAuth2, typically combined with OpenID Connect for sign-in, lets users log in through trusted identity providers, reducing password fatigue. With SSO, users log in once to gain access to multiple systems, streamlining authentication without compromising security.

Biometric authentication, through fingerprint scanning, facial recognition, or voice identification, adds an extra layer of security by verifying unique user traits. Modern devices incorporate these authentication methods to streamline user verification, reducing reliance on passwords while maintaining strong security standards.

Many platforms now support WebAuthn, which lets users authenticate without entering a password at all. AI-enhanced authentication, meanwhile, adapts security measures to user behavior, analyzing factors such as device type, login location, and historical activity to assess risk levels.

If an attempt appears suspicious, the system may trigger additional security steps, such as requesting a secondary verification method.
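The adaptive approach described above can be sketched as a risk score computed from login signals and then mapped to an allow, step-up, or deny decision. The version below is deliberately rule-based. Its signals, weights, and thresholds are hypothetical. An AI-driven system would learn them from historical login data rather than hard-coding them.

```typescript
interface LoginContext {
  knownDevice: boolean;            // has this device signed in successfully before?
  country: string;                 // e.g. derived from the request's IP address
  usualCountries: string[];        // countries seen in the account's login history
  failedAttemptsLastHour: number;
}

type AuthDecision = "allow" | "step-up" | "deny";

// Hypothetical hand-written weights; a learned model would replace this scoring.
function riskScore(ctx: LoginContext): number {
  let score = 0;
  if (!ctx.knownDevice) score += 30;
  if (!ctx.usualCountries.includes(ctx.country)) score += 40;
  score += Math.min(ctx.failedAttemptsLastHour, 5) * 6; // capped at 30 points
  return score;
}

function decide(ctx: LoginContext): AuthDecision {
  const score = riskScore(ctx);
  if (score >= 90) return "deny";
  if (score >= 40) return "step-up"; // e.g. ask for a second verification method
  return "allow";
}

const usual = ["US"];
console.log(decide({ knownDevice: true, country: "US", usualCountries: usual, failedAttemptsLastHour: 0 }));  // allow
console.log(decide({ knownDevice: false, country: "US", usualCountries: usual, failedAttemptsLastHour: 2 })); // step-up
console.log(decide({ knownDevice: false, country: "BR", usualCountries: usual, failedAttemptsLastHour: 5 })); // deny
```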

Handling User Data Responsibly

Protecting sensitive user data is both a core legal obligation and a fundamental component of maintaining trust with the users of your web application.

Various regulatory frameworks such as GDPR (General Data Protection Regulation), CCPA (California Consumer Privacy Act), and PCI DSS (Payment Card Industry Data Security Standard) enforce strict guidelines on collecting, storing, and processing personal and financial data. Failure to comply can result in fines, legal penalties, and operational restrictions.

Data minimization reduces exposure to breaches by collecting and storing only the data necessary for application functionality. Avoiding excessive data collection limits the potential impact of security incidents.

Anonymization and pseudonymization techniques further protect sensitive information by stripping or masking identifiable details, making it more difficult for attackers to exploit stolen data. Even if attackers gain access to an organization’s systems, encryption keeps critical data protected and unreadable.
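The sketch below combines two of these techniques. A `minimize` function keeps only the fields that a hypothetical signup feature needs. A small token vault then replaces email addresses with stable pseudonyms before the data reaches analytics. The sequential tokens are a simplification for readability. Real systems use unguessable random tokens and store the vault separately under stricter access controls.

```typescript
interface SignupForm {
  email: string;
  displayName: string;
  birthDate: string;
  referralNotes: string;
}

// Data minimization: persist only what the feature needs (here, email and display name).
type StoredUser = Pick<SignupForm, "email" | "displayName">;

function minimize(formData: SignupForm): StoredUser {
  return { email: formData.email, displayName: formData.displayName };
}

// Pseudonymization: downstream systems see a stable token instead of the email address.
class PseudonymVault {
  private readonly tokenByValue = new Map<string, string>();
  private readonly valueByToken = new Map<string, string>();

  tokenize(value: string): string {
    const existing = this.tokenByValue.get(value);
    if (existing !== undefined) return existing; // same person -> same token
    const token = `user-${this.tokenByValue.size + 1}`; // simplification: use random tokens in practice
    this.tokenByValue.set(value, token);
    this.valueByToken.set(token, value);
    return token;
  }

  // Re-identification is possible only with access to the vault itself.
  reidentify(token: string): string | undefined {
    return this.valueByToken.get(token);
  }
}

const submitted: SignupForm = {
  email: "ana@example.com",
  displayName: "Ana",
  birthDate: "1990-04-01",
  referralNotes: "met at a trade show",
};
const stored = minimize(submitted);
const vault = new PseudonymVault();
const analyticsEvent = { user: vault.tokenize(stored.email), action: "signup" };
console.log(Object.keys(stored), analyticsEvent);
// [ 'email', 'displayName' ] { user: 'user-1', action: 'signup' }
```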

Data in transit must always be protected using TLS encryption, preventing interception during transmission. With encryption at rest, sensitive database information remains protected, as decryption keys are required to make any stolen data usable.

Organizations must enforce strict access controls across internal systems to safeguard sensitive data from unauthorized users. Limiting database access based on roles and permissions prevents employees or services from retrieving more data than necessary.

Logging and monitoring data access events help detect unusual activity, allowing organizations to respond to potential threats before they escalate.

Penetration Testing & Security Audits

Penetration testing (pen testing) proactively exposes system weaknesses, allowing security teams to strengthen defenses before cybercriminals strike.

Ethical hackers attempt to bypass security controls, identifying gaps that developers may have overlooked. These tests provide valuable insights into application resilience and help strengthen defenses.

Security audits complement penetration testing by conducting comprehensive reviews of code, configurations, and access policies. Combining automated scanning tools with manual code reviews helps detect vulnerabilities that might otherwise be overlooked during standard development.

These audits should be conducted regularly, particularly after major updates or when infrastructure changes.

A Security Information and Event Management (SIEM) system collects and analyzes log data from across an organization’s systems, detecting anomalies and suspicious patterns that may signal security incidents.

Repeated failed login attempts and unauthorized access attempts generate security alerts that prompt further investigation. By monitoring security events in real time, organizations gain deeper visibility into potential threats and can act immediately to mitigate risks.
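The failed-login alert described above can be expressed as a sliding-window rule: raise an alert when one account accumulates too many failures within a short period. The sketch below keeps timestamps in memory. The threshold of five failures per minute is an illustrative assumption. In practice, a SIEM evaluates rules like this across log data from many systems.

```typescript
class FailedLoginMonitor {
  private readonly failuresByAccount = new Map<string, number[]>(); // account -> failure times (ms)
  private readonly maxFailures: number;
  private readonly windowMs: number;

  constructor(maxFailures: number, windowMs: number) {
    this.maxFailures = maxFailures;
    this.windowMs = windowMs;
  }

  // Records one failed attempt; returns true when the account should raise a security alert.
  recordFailure(account: string, nowMs: number): boolean {
    const recent = (this.failuresByAccount.get(account) ?? []).filter(
      (t) => nowMs - t < this.windowMs, // drop failures that fell out of the window
    );
    recent.push(nowMs);
    this.failuresByAccount.set(account, recent);
    return recent.length >= this.maxFailures;
  }
}

const monitor = new FailedLoginMonitor(5, 60_000); // 5 failures within one minute
const alerts = [0, 5_000, 10_000, 15_000, 20_000].map((t) => monitor.recordFailure("admin", t));
console.log(alerts); // [ false, false, false, false, true ]
```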

Regular testing and continuous monitoring strengthen application security by identifying risks before they become full-scale attacks. As threats evolve, security strategies must adapt to protect against the latest and most damaging attack methods.

Through penetration testing, security audits, and real-time monitoring, businesses can reduce their exposure to cyber threats and improve their overall resilience.

Chapter 04

AI & Machine Learning Integration In Web Applications

Artificial intelligence and machine learning are transforming how web applications operate, providing personalized experiences, automating complex tasks, and delivering data-driven insights.

Businesses use AI to improve customer interactions, detect fraudulent activities, and predict user behavior, leading to more efficient operations and better-informed decisions. From recommendation engines to conversational AI, these technologies enhance engagement while streamlining backend processes.

Web applications that incorporate AI gain an advantage in responsiveness, efficiency, and adaptability. Predictive analytics helps businesses anticipate trends, while AI-driven automation reduces manual workloads.

Integrating machine learning into web applications requires thoughtful implementation, balancing technical considerations with ethical responsibilities such as data privacy and bias reduction.

How AI & ML Enhance Web Application Functionality

With artificial intelligence, web applications can continuously learn from data, refining their insights and automating personalized experiences.

Businesses increasingly rely on these capabilities to improve user engagement, detect security threats, and optimize decision-making. Machine learning models refine their accuracy over time, learning from user interactions to provide more relevant content and recommendations.

Personalization plays an important part in making the user experience better. In modern web applications, AI algorithms are able to analyze browsing habits, purchase history, and interaction patterns to deliver customized content.

For example, streaming platforms like Netflix use recommendation engines that analyze past viewing habits to personalize content suggestions, boosting user engagement and retention. Similarly, e-commerce sites analyze browsing and purchase history to display personalized product suggestions.
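A minimal content-based recommender can be built from item feature vectors and cosine similarity. In the sketch below, each title in a hypothetical catalog is scored on three genre features. The user's taste profile is the sum of the vectors for the titles they watched, and unwatched titles are ranked by their similarity to that profile. Production recommendation engines use much richer signals and learned representations.

```typescript
// Hypothetical catalog: [action, comedy, documentary] feature scores per title.
const catalog = new Map<string, number[]>([
  ["Space Raid", [0.9, 0.1, 0.0]],
  ["Laugh Track", [0.1, 0.9, 0.0]],
  ["Deep Oceans", [0.0, 0.1, 0.9]],
  ["Mech Storm", [0.8, 0.2, 0.1]],
]);

function cosine(a: number[], b: number[]): number {
  const dot = a.reduce((sum, x, i) => sum + x * (b[i] ?? 0), 0);
  const norm = (v: number[]): number => Math.sqrt(v.reduce((sum, x) => sum + x * x, 0));
  const denominator = norm(a) * norm(b);
  return denominator === 0 ? 0 : dot / denominator; // treat zero vectors as unrelated
}

function recommend(watched: string[], topN: number): string[] {
  // Taste profile: element-wise sum of watched titles' vectors (cosine ignores overall scale).
  const profile = [0, 0, 0];
  for (const title of watched) {
    const v = catalog.get(title);
    if (v === undefined) continue; // unknown titles are ignored
    v.forEach((x, i) => {
      profile[i] = (profile[i] ?? 0) + x;
    });
  }
  const scored: { title: string; score: number }[] = [];
  catalog.forEach((v, title) => {
    if (!watched.includes(title)) scored.push({ title, score: cosine(profile, v) });
  });
  scored.sort((x, y) => y.score - x.score); // most similar first
  return scored.slice(0, topN).map((s) => s.title);
}

console.log(recommend(["Space Raid"], 2)); // [ 'Mech Storm', 'Laugh Track' ]
```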

Web security relies on AI for fraud detection, using predictive analytics to identify high-risk behaviors and prevent potential cyber threats. For example, machine learning algorithms can monitor transaction patterns and flag anomalies that may indicate fraud.

Financial institutions and online retailers use these models to flag suspicious activity, reducing the risks associated with unauthorized transactions. Unlike traditional rule-based systems, AI models can adapt to emerging fraud tactics as they are retrained, strengthening an organization’s security over time.
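As a rough illustration of anomaly flagging, the sketch below scores new transactions with a simple z-score against one customer's past purchase amounts. It is a deliberately basic statistical stand-in, not the trained fraud models described above; the function name, the sample history, and the 3.0 threshold are all hypothetical.

```python
from statistics import mean, stdev

def flag_anomalies(history, new_amounts, threshold=3.0):
    """Return new amounts lying more than `threshold` standard deviations from the historical mean."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs at least two data points
    if sigma == 0:
        # Every past amount was identical, so any different amount is unusual
        return [a for a in new_amounts if a != mu]
    return [a for a in new_amounts if abs(a - mu) / sigma > threshold]

# Hypothetical past purchase amounts for one customer
history = [42.0, 38.5, 51.0, 45.25, 40.0, 47.5, 39.0, 44.0]
print(flag_anomalies(history, [43.0, 49.0, 950.0]))  # → [950.0]
```

A production system would typically score many signals at once (location, device, merchant, timing) with a learned model rather than relying on a single amount.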

Predictive analytics helps businesses stay ahead by anticipating customer expectations and optimizing workflows. By analyzing historical data, AI-driven tools detect trends that companies can translate into strategic advantages.

Online retailers use demand forecasting to manage inventory, while service-based businesses analyze customer behavior to improve engagement strategies. These AI-based insights improve efficiency by reducing guesswork and allowing teams to make adjustments proactively.

With AI-driven automation, businesses can deploy chatbots and virtual assistants to handle routine inquiries, reducing wait times and improving customer service. Unlike traditional workflows that rely solely on human agents, AI chatbots can handle many conversations simultaneously, so they scale with demand far more easily.

Choosing The Right ML Models For Your Application

Different machine learning models excel in different scenarios, making it essential to match the model to both the dataset and the application.

Most machine learning models used in web applications fall into two broad categories: supervised learning and unsupervised learning. Understanding the distinction helps businesses make informed decisions and choose the best solution for their needs.

In supervised learning, models are trained on labeled datasets, using known inputs and outputs to refine their predictions. Supervised learning powers applications such as fraud detection and spam filtering, and models typically become more accurate as they are trained on more high-quality labeled data.

Unsupervised learning identifies patterns in datasets without predefined labels. Clustering algorithms detect similarities within datasets, supporting applications such as market segmentation, fraud prevention, and recommendation personalization.
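Clustering can be sketched with k-means (Lloyd's algorithm), one common clustering technique among many. The example below groups hypothetical customers by monthly visits and average order value. To stay deterministic it seeds the centroids with the first k points, whereas library implementations usually use randomized seeding such as k-means++.

```python
import math

def kmeans(points, k, iterations=20):
    """Cluster points with Lloyd's algorithm; centroids start at the first k points."""
    centroids = [tuple(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members
        new_centroids = []
        for c in range(k):
            members = [p for p, lbl in zip(points, labels) if lbl == c]
            if members:
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged: assignments no longer move the centroids
        centroids = new_centroids
    return labels, centroids

# Hypothetical customers: (visits per month, average order value)
customers = [(1, 20), (2, 25), (1, 22), (10, 200), (12, 210), (11, 190)]
labels, centroids = kmeans(customers, k=2)
print(labels)  # → [0, 0, 0, 1, 1, 1]
```

In practice the features would usually be scaled first so that order value does not dominate the distance calculation.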

Unlike supervised learning, unsupervised learning can uncover hidden patterns in large datasets, often revealing insights that were not immediately obvious.

Several factors influence the effectiveness of a machine learning model:

- Data quality is a major consideration, as inaccurate or biased data leads to flawed predictions.

- Feature selection, or determining which attributes contribute most to the model’s accuracy, also affects performance. Models trained on irrelevant or excessive features tend to overfit, reducing their ability to generalize to new data.

- Computational demands scale with model complexity, influencing hardware needs and training efficiency. Deep learning architectures, such as neural networks, demand significant processing power, often requiring GPUs or cloud-based resources.

Lighter models, such as decision trees or logistic regression, perform well on smaller datasets without consuming excessive computing resources. Businesses must carefully consider and assess their current infrastructure capabilities when choosing between lightweight models and more computationally intensive approaches.

Using a machine learning model effectively requires ongoing monitoring and refinement. Metrics such as F1-score and AUC-ROC for classification models, and mean squared error for regression models, help assess model performance and identify areas for improvement.
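The metrics named above can be computed from scratch as below, which makes their definitions concrete: F1 is the harmonic mean of precision and recall, mean squared error averages squared prediction errors, and AUC-ROC equals the probability that a randomly chosen positive example is scored higher than a randomly chosen negative one. In practice a library such as scikit-learn (f1_score, mean_squared_error, and roc_auc_score in sklearn.metrics) would normally be used; the labels and scores here are made up.

```python
def f1(y_true, y_pred):
    """Harmonic mean of precision and recall for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

def mse(y_true, y_pred):
    """Mean squared error for numeric (regression) predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def auc_roc(y_true, scores):
    """Share of positive/negative pairs ranked correctly; ties count as half."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical classifier output: true labels, hard predictions, and raw scores
labels = [1, 0, 1, 1, 0, 0]
print(round(f1(labels, [1, 0, 0, 1, 1, 0]), 3))                   # → 0.667
print(round(auc_roc(labels, [0.9, 0.2, 0.4, 0.8, 0.6, 0.1]), 3))  # → 0.889
print(round(mse([3.0, 5.0, 2.5], [2.5, 5.0, 3.0]), 3))            # → 0.167
```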

To maintain relevance and precision, AI-driven web applications must retrain their models as new data emerges, refining their outputs over time.

Implementing Chatbots & Intelligent Assistants

Chatbots and AI-driven assistants streamline customer interactions by automating responses to common inquiries. Businesses use these tools to reduce wait times, improve user engagement, and provide 24/7 support without requiring additional human resources.

Unlike AI-driven bots, rule-based chatbots rely on scripted pathways and keyword matching to handle conversations. These systems perform well with predefined queries but lack the flexibility to handle intricate or unstructured discussions.

AI-powered chatbots, in contrast, use natural language processing (NLP) to understand context and intent, allowing for much more dynamic conversations. Online retailers use them to enhance the shopping experience, providing recommendations, order updates, and personalized deals.

Customer service departments integrate chatbots to handle frequently asked questions, cutting down on the workload of human agents. With conversational AI, businesses can automate lead qualification so that potential customers receive the right information at the right stage of the sales funnel.

There are several best practices to follow that can help improve chatbot performance and usability.

Training AI chatbots on diverse datasets helps them better understand a broad range of user inputs, reducing the likelihood of misinterpretations. Providing fallback options, such as transferring the conversation to a live agent when necessary, prevents frustration when chatbots encounter complex requests.
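A minimal sketch of the live-agent fallback, assuming a toy keyword-based intent matcher: when no intent matches, or two intents match equally well, the bot hands off instead of guessing. The intents, keywords, and reply text are hypothetical; an NLP-based bot would apply the same pattern to its model's confidence scores.

```python
import re

# Hypothetical intents: (keyword set, canned reply)
INTENTS = {
    "order_status": ({"order", "tracking", "shipped", "where"},
                     "You can check your order status under Account > Orders."),
    "returns": ({"return", "refund", "exchange"},
                "You can start a return from the Orders page."),
}
HANDOFF = "Let me connect you with a member of our support team."

def respond(message):
    words = set(re.findall(r"[a-z']+", message.lower()))
    # Score each intent by how many of its keywords appear in the message
    scores = sorted(((len(words & kw), name) for name, (kw, _) in INTENTS.items()), reverse=True)
    best_score, best_name = scores[0]
    runner_up = scores[1][0] if len(scores) > 1 else 0
    # Hand off to a person when nothing matches or the top match is ambiguous
    if best_score == 0 or best_score == runner_up:
        return HANDOFF
    return INTENTS[best_name][1]

print(respond("Where is my order?"))
print(respond("My invoice looks wrong"))  # → the handoff message
```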

Conversational design plays a major role in chatbot success. Structuring dialogues to feel natural, keeping responses concise, and maintaining a consistent tone all improve user interactions. Chatbots should also acknowledge user input before responding, which reinforces engagement.

Data privacy considerations are incredibly important when implementing AI chatbots. Systems that collect user data must comply with regulatory standards and avoid storing sensitive information unnecessarily.

Being transparent in chatbot interactions, including clearly indicating when users are engaging with an AI system, helps build user trust.

Managing Data For AI Integration

Machine learning models rely on structured data pipelines that collect, store, and process information before generating insights. Effective data management directly influences AI performance, impacting accuracy, speed, and reliability.

Storage solutions vary depending on the scale of the data being processed. Relational databases handle structured information efficiently, while NoSQL databases support large-scale, unstructured data storage. Data lakes provide flexible storage for raw information, allowing applications to process and analyze datasets as needed.

Preprocessing steps improve data quality before it enters a machine learning model. Refining datasets through deduplication, consistency checks, and missing data handling actively strengthens the accuracy of machine learning models. Transforming unprocessed data into well-defined features allows models to learn more effectively and generate better insights.
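The three preprocessing steps above can be sketched on a small list of hypothetical user records: emails are normalized for consistency, duplicates are dropped by normalized email, and missing ages are filled with the median. Median imputation is only one option, chosen here for simplicity; the right strategy depends on the data and the model.

```python
from statistics import median

def preprocess(records):
    """Deduplicate, normalize, and impute missing ages in a list of user dicts."""
    seen = set()
    cleaned = []
    for r in records:
        email = r["email"].strip().lower()  # consistency: one canonical form per address
        if email in seen:
            continue  # deduplication on the normalized email
        seen.add(email)
        cleaned.append({"email": email, "age": r.get("age")})
    known = [r["age"] for r in cleaned if r["age"] is not None]
    fill = median(known) if known else None
    for r in cleaned:
        if r["age"] is None:
            r["age"] = fill  # missing data: median imputation
    return cleaned

raw = [
    {"email": "Ann@Example.com", "age": 34},
    {"email": " ann@example.com", "age": 34},  # duplicate after normalization
    {"email": "bo@example.com", "age": None},
    {"email": "cy@example.com", "age": 28},
    {"email": "di@example.com"},               # age field missing entirely
]
print([r["age"] for r in preprocess(raw)])  # → [34, 31.0, 28, 31.0]
```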

AI systems use batch processing for periodic data aggregation and real-time processing for applications requiring immediate reactions.

For tasks that don’t require immediate results, batch processing provides an efficient way to analyze trends and compile detailed reports. With real-time data analysis, applications can detect fraud instantly, personalize user experiences, and provide continuous monitoring without delays.
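The contrast between the two modes can be sketched with the same hypothetical purchase events: the batch function aggregates a full day at once, while the streaming monitor evaluates each event as it arrives. The window size (counted in events rather than time, for brevity) and the spending limit are illustrative assumptions.

```python
from collections import defaultdict, deque

def batch_daily_totals(events):
    """Batch: aggregate a whole day's events at once, e.g. for a nightly report."""
    totals = defaultdict(float)
    for user, amount in events:
        totals[user] += amount
    return dict(totals)

class RealTimeMonitor:
    """Streaming: check each event on arrival against a window of the user's recent amounts."""

    def __init__(self, window=5, limit=1000.0):
        self.recent = defaultdict(lambda: deque(maxlen=window))
        self.limit = limit

    def process(self, user, amount):
        window = self.recent[user]
        window.append(amount)
        # Alert immediately if recent spending exceeds the limit
        return sum(window) > self.limit

events = [("u1", 200.0), ("u2", 50.0), ("u1", 900.0), ("u2", 25.0)]
print(batch_daily_totals(events))  # → {'u1': 1100.0, 'u2': 75.0}
monitor = RealTimeMonitor()
print([monitor.process(user, amount) for user, amount in events])  # → [False, False, True, False]
```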

As AI becomes more prevalent in web applications, developers must address ethical challenges such as bias, consent, and responsible data usage.

If training data is skewed, AI systems may reinforce biases, emphasizing the need for careful dataset auditing and balancing. AI models that influence hiring decisions, credit scoring, or legal outcomes must undergo rigorous testing to prevent unintended biases.

Transparency improves user trust in AI-driven systems. Actions, such as providing explanations for AI-generated recommendations, allowing users to review decision-making criteria, and maintaining accountability for automated processes, help build credibility.

Maintaining ongoing compliance with data protection regulations, such as GDPR and CCPA, further reinforces responsible AI integration.

The role of artificial intelligence in web applications continues to expand over time, actively shaping how businesses manage their operations. The strategic implementation of AI and machine learning enhances efficiency, strengthens security, and delivers more personalized user experiences.

Applications that prioritize data integrity, performance optimization, and ethical considerations are best positioned to benefit from AI’s evolving capabilities and to become more appealing to users.


Chapter 05

Web Application Performance Optimization Techniques

Fast-loading web applications enhance user experience, improve search rankings, and boost overall system efficiency. In contrast, slow performance increases bounce rates and reduces engagement, making optimization essential for both developers and businesses.

Performance optimization spans multiple aspects of an application, from front-end rendering to back-end data retrieval. Implementing efficient caching mechanisms, utilizing CDNs, and taking advantage of strategic resource-loading techniques enables applications to respond quickly to user interactions.

Additionally, optimizing JavaScript execution, refining database queries, and minimizing unnecessary processing can help further improve responsiveness.

Improving Load Times With Caching & CDN

Web applications use caching and CDNs to improve responsiveness. Both techniques reduce the time it takes to retrieve and display content by storing frequently accessed data closer to users.

A content delivery network distributes assets such as images, stylesheets, and scripts throughout geographically dispersed servers.

Instead of fetching resources from a single origin server, users receive content from the nearest CDN node, reducing network latency. With this setup, load times are improved for global audiences while preventing bottlenecks by distributing traffic more efficiently.

Caching mechanisms can further increase app performance by reducing redundant data processing.

Browser caching allows frequently used assets to be stored locally, preventing the need to reload them on every page visit. Proper cache expiration settings strike a balance between delivering up-to-date content and maintaining optimal performance. Server-side caching enhances scalability by decreasing database load and allowing applications to handle more requests efficiently.
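One common way to balance freshness and speed, offered here as an assumption rather than a universal rule, is to give content-hashed static files a long Cache-Control lifetime and make HTML revalidate on each use. The sketch below picks a header value per path; the fingerprinted file-naming pattern and the one-hour default are hypothetical.

```python
import re

# Assumed naming scheme: build tools append a content hash, e.g. app.3f9a1c2b.js
FINGERPRINTED = re.compile(r"\.[0-9a-f]{8,}\.(?:js|css|png|jpg|webp|woff2)$")

def cache_control_for(path):
    """Choose a Cache-Control header value for a requested path."""
    if FINGERPRINTED.search(path):
        # The URL changes whenever the file changes, so caching for a year is safe
        return "public, max-age=31536000, immutable"
    if path == "/" or path.endswith(".html"):
        # Revalidate HTML on every use so users promptly see new asset references
        return "no-cache"
    # Everything else: a modest one-hour lifetime as a middle ground
    return "public, max-age=3600"

for p in ["/static/app.3f9a1c2b.js", "/index.html", "/images/logo.png"]:
    print(p, "->", cache_control_for(p))
```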

Frequently requested API responses, database query results, and rendered HTML pages can be stored in memory using Redis, Memcached, or similar tools, reducing back-end processing time. For high-traffic applications, microcaching stores dynamic content for very short durations, often just a few seconds, improving response times while limiting how out of date the served content can become.
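The idea behind server-side caching and microcaching can be sketched with a small in-process cache whose entries expire after a time-to-live (TTL). This is a single-process stand-in for a shared store such as Redis or Memcached; the class, the one-second TTL, and the render_homepage function are hypothetical, and the injectable clock exists only to make the behavior easy to test.

```python
import time

class TTLCache:
    """In-memory cache whose entries expire `ttl` seconds after they were stored."""

    def __init__(self, ttl, clock=time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._store = {}  # key -> (value, time stored)

    def get_or_compute(self, key, compute):
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and now - entry[1] < self.ttl:
            return entry[0]  # fresh hit: skip the expensive work
        value = compute()  # miss or expired: regenerate and store
        self._store[key] = (value, now)
        return value

def render_homepage():
    # Hypothetical stand-in for an expensive database query plus template rendering
    return "<html>...</html>"

# Microcaching: even a 1-second TTL absorbs bursts of identical requests
microcache = TTLCache(ttl=1.0)
html = microcache.get_or_compute("/home", render_homepage)
```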

Efficient caching strategies lower the strain on infrastructure, cut down on bandwidth consumption, and provide a smoother browsing experience for end users.

Using efficient caching at both the server and browser levels maximizes web application speed while also supporting high user demand.


Code Splitting & Lazy Loading For Faster Load Times

Large JavaScript bundles can increase page load times, delaying interactivity and frustrating users as a result.

Applications that rely on excessive JavaScript execution often become sluggish, particularly on mobile devices with limited processing power. Code splitting and lazy loading reduce these inefficiencies by loading resources when required instead of all at once.

Code splitting divides JavaScript into smaller chunks rather than delivering a single large file to users. Dividing code into logical sections ensures applications load only the essential scripts for each page, cutting down on unnecessary processing and improving load speed.

JavaScript bundlers such as Webpack and Rollup support code splitting, for example by emitting a separate chunk for each dynamic import() call, which reduces the manual work required to optimize performance.

Lazy loading conserves resources by retrieving content only when it’s needed, reducing unnecessary data transfers. Images, videos, and JavaScript components don’t need to be loaded all at once, especially for content that is not visible immediately.

Instead, elements load as the user scrolls down the page or interacts with specific features, reducing the initial payload and allowing the most important content to appear faster.

Combining lazy loading with efficient asset management further improves responsiveness. Images can be served in next-generation formats like WebP, which typically reduces file size with little visible loss in quality. Scripts and stylesheets can be conditionally loaded based on viewport size and device type, preventing unnecessary requests.

Modern front-end frameworks, including React, Vue, and Angular, provide built-in support for lazy loading and dynamic imports. Together, these techniques optimize the rendering pipeline, reducing blocking work and improving perceived performance.


Optimizing Front-End & Back-End Performance

Performance optimization requires attention to both client-side execution and server-side efficiency.

While front-end improvements focus on reducing rendering delays and JavaScript execution time, back-end optimizations enhance data retrieval, API performance, and server response times. JavaScript execution on the main thread often leads to sluggish interfaces, particularly when large scripts block user interactions.

Minimizing main-thread execution by deferring non-essential scripts, offloading tasks to Web Workers, and optimizing event listeners prevents unnecessary delays. Long-running operations should be broken into smaller tasks using requestIdleCallback or async processing techniques.

Efficient API design significantly improves back-end performance. Caching, database indexing, and optimized queries reduce API response times by shortening the time it takes to fetch data. Implementing pagination and selective data retrieval prevents excessive payloads by delivering only the information the client needs.
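Pagination and selective field retrieval can be sketched over an in-memory list, as below. In a real API the same limits would normally be pushed into the database query (for example with LIMIT/OFFSET or keyset pagination) rather than applied after loading every row; the function name, parameters, and product data are hypothetical.

```python
def paginate(items, page, per_page=20, fields=None):
    """Return one page of `items`, optionally trimmed to the requested `fields`."""
    if page < 1 or per_page < 1:
        raise ValueError("page and per_page must be positive integers")
    start = (page - 1) * per_page
    chunk = items[start:start + per_page]
    if fields is not None:
        # Selective retrieval: send only the fields the client asked for
        chunk = [{f: item[f] for f in fields if f in item} for item in chunk]
    total_pages = -(-len(items) // per_page)  # ceiling division
    return {"page": page, "total_pages": total_pages, "data": chunk}

# Hypothetical catalog where each product carries a large description
products = [{"id": i, "name": f"Product {i}", "description": "..." * 500} for i in range(1, 46)]
response = paginate(products, page=3, fields=["id", "name"])
print(response["total_pages"], response["data"][0])  # → 3 {'id': 41, 'name': 'Product 41'}
```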

Database performance plays an important role in application speed. Indexing frequently queried fields, optimizing joins, and avoiding unnecessary computations reduce query execution time. Applications that rely heavily on database queries also benefit from connection pooling, which reuses open connections instead of opening a new one for each request, and from query caching, which stores common results and reduces load on the database server.
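The effect of indexing a frequently queried field can be observed with SQLite's EXPLAIN QUERY PLAN, using Python's built-in sqlite3 module. The orders table and index name are hypothetical, and the exact plan wording varies between SQLite versions, but the change from a full table scan to a search using the new index should be visible.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
# Hypothetical data: 10,000 orders spread across 500 customers
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 500, i * 1.5) for i in range(10_000)],
)

QUERY = "SELECT id, total FROM orders WHERE customer_id = ?"

def query_plan(sql, params):
    # The last column of each EXPLAIN QUERY PLAN row is the human-readable step
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]

before = query_plan(QUERY, (42,))
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = query_plan(QUERY, (42,))
print(before)  # expect a full scan of the orders table
print(after)   # expect a search that uses idx_orders_customer
```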

Dynamically scaling infrastructure prevents performance bottlenecks under heavy traffic. Load balancers distribute requests across servers, for example by sending each new request to the server with the fewest active connections, which keeps resource usage even and the system efficient.
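The least-busy routing rule is commonly called least connections. A minimal in-process sketch follows; the backend names are hypothetical, and a real load balancer would also handle health checks and failed servers.

```python
class LeastConnectionsBalancer:
    """Send each new request to the backend with the fewest in-flight requests."""

    def __init__(self, backends):
        self.active = {backend: 0 for backend in backends}

    def acquire(self):
        # min() returns the first backend on ties, so list order breaks ties
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend

    def release(self, backend):
        # Call when the request finishes so the count stays accurate
        self.active[backend] -= 1

lb = LeastConnectionsBalancer(["app-1", "app-2", "app-3"])  # hypothetical hosts
first = [lb.acquire() for _ in range(3)]  # one request each
lb.release("app-2")                        # app-2 finishes early
print(first, lb.acquire())  # → ['app-1', 'app-2', 'app-3'] app-2
```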

Applications that are designed with asynchronous processing can handle background tasks more efficiently, preventing delays caused by blocking operations.

A well-optimized front end reduces unnecessary processing, while an efficient back end delivers data quickly, together creating an optimal web experience. Combining caching, efficient resource management, and streamlined server operations improves both speed and reliability.


Chapter 06

Frequently Asked Questions

What Is The Difference Between A Progressive Web Application (PWA) & A Traditional Web Application?

A progressive web application (PWA) blends the capabilities of a traditional web application with features typically found in native mobile apps. Traditional web applications require an internet connection to function, whereas PWAs leverage service workers to enable offline access, caching content for improved performance.

With home screen installation, push notifications, and background synchronization, PWAs function similarly to native apps while remaining web-based.

Traditional web applications operate solely through a browser, lacking offline functionality and deeper integration with device features. Businesses adopting PWAs reduce reliance on app stores, allowing users to access their applications directly without requiring downloads or updates.

Another distinction is found in performance and speed. PWAs load faster due to caching mechanisms that store resources locally, reducing the need for repeated network requests.

Traditional multi-page web applications typically reload entire pages on navigation, resulting in slower interactions and longer loading times. From a development perspective, PWAs simplify maintenance because a single codebase serves multiple platforms.

Traditional applications often require separate mobile and desktop versions, increasing development time and complexity. Businesses looking for cost-effective, scalable, and engaging solutions increasingly favor PWAs over conventional web applications.

How Does Serverless Architecture Impact The Cost & Scalability Of Web Applications?

Serverless architecture changes how applications are hosted and scaled, shifting infrastructure management to cloud providers. Unlike traditional server-based deployments, where businesses must provision and maintain servers, serverless computing allows applications to run on demand without direct infrastructure oversight.

Cost savings are an important benefit, as traditional servers run continuously and incur costs even during periods of low activity. Serverless models use pay-as-you-go pricing, meaning businesses pay only for actual usage, such as compute time.

If an application receives no traffic, no compute charges apply, significantly reducing expenses for applications with unpredictable usage patterns.
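The pay-per-use argument is easiest to see with a worked example. The rates below are hypothetical placeholders, not any provider's actual pricing, and free tiers, networking, and storage are ignored; the model multiplies requests by duration, memory, and a per-GB-second rate, adds a per-request fee, and compares the result with an always-on server billed for roughly 730 hours a month.

```python
HOURS_PER_MONTH = 730  # approximate

def serverless_monthly_cost(requests, avg_seconds, memory_gb,
                            price_per_gb_second, price_per_million_requests):
    compute = requests * avg_seconds * memory_gb * price_per_gb_second
    invocations = requests / 1_000_000 * price_per_million_requests
    return compute + invocations

def server_monthly_cost(hourly_rate):
    # An always-on server is billed whether or not any traffic arrives
    return hourly_rate * HOURS_PER_MONTH

# Hypothetical rates for illustration only
RATES = {"price_per_gb_second": 0.00002, "price_per_million_requests": 0.20}

low_traffic = serverless_monthly_cost(300_000, 0.2, 0.5, **RATES)
idle = serverless_monthly_cost(0, 0.2, 0.5, **RATES)
always_on = server_monthly_cost(0.05)
print(f"serverless: ${low_traffic:.2f}, idle: ${idle:.2f}, server: ${always_on:.2f}")
# → serverless: $0.66, idle: $0.00, server: $36.50
```

At sustained high volume the comparison can reverse, which is why the savings are greatest for applications with low or unpredictable usage.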

Scalability improves since serverless functions automatically adjust to demand. Traditional architectures require manual scaling or load balancing to handle traffic spikes, often leading to over-provisioning of resources.

Serverless environments allocate resources dynamically, preventing bottlenecks without the need for human intervention. This automatic scaling makes serverless well suited to event-driven tasks, including instant notifications, API calls, and scheduled jobs.

Despite the benefits, cold starts present a challenge. If a function remains idle for too long, invoking it requires the cloud provider to create a fresh instance, adding latency. Applications requiring consistently low latency, such as financial trading platforms, may need to use provisioned concurrency to mitigate this issue.

Security considerations also differ from traditional deployments. Serverless applications rely heavily on third-party cloud providers, requiring careful configuration of access controls, authentication mechanisms, and API security to prevent unauthorized access.

Businesses adopting serverless architecture gain flexibility and cost savings but must also carefully evaluate whether the operational model aligns with their application’s performance and security needs.

What Are The Security Risks Associated With Integrating AI & Machine Learning Into A Web Application?

AI and machine learning improve web applications by automating decision-making, detecting anomalies, and personalizing user experiences. While these technologies enhance functionality, they also introduce security risks that must be mitigated to protect data integrity and user privacy.

A major concern is data privacy and regulatory compliance, as AI models often require vast amounts of data to become accurate.

If improperly handled, personally identifiable information (PII) may be exposed, leading to regulatory violations under laws such as GDPR and CCPA. To safeguard sensitive data, businesses must apply data anonymization techniques, implement strong encryption, and maintain strict access controls.
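One technique related to the anonymization described above is keyed-hash pseudonymization: an identifier such as an email address is replaced with an HMAC so records can still be linked for training without storing the raw value. Under GDPR, pseudonymized data generally still counts as personal data, so this complements rather than replaces encryption and access controls. The key value and record fields below are hypothetical; a real key would come from a secrets manager.

```python
import hashlib
import hmac

def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 hash (HMAC) so it can be joined but not read."""
    normalized = identifier.strip().lower()  # the same person always maps to the same token
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()

# Hypothetical key; in practice load it from a secrets manager and never store it with the data
KEY = b"example-only-secret-key"
record = {"email": "Ann@Example.com", "purchase_total": 120.0}
training_row = {"user_token": pseudonymize(record["email"], KEY),
                "purchase_total": record["purchase_total"]}
print(training_row)
```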

Bias in AI decision-making presents another challenge for developers to overcome. If a machine learning model learns from biased or unbalanced data, it may amplify inequalities and produce discriminatory results.

Various solutions, such as AI-driven hiring tools, credit-scoring applications, and facial recognition systems, have faced scrutiny for reinforcing racial, gender, or socioeconomic biases due to flawed training data. Companies can help mitigate these risks by implementing regular audits, diverse training datasets, and fairness checks.

Model security poses a significant challenge, as attackers can manipulate inputs with subtle distortions, leading AI systems to generate inaccurate conclusions.

Attackers could exploit potential vulnerabilities in image recognition, spam filters, or fraud detection systems, bypassing security measures undetected. Techniques such as adversarial training, input validation, and model monitoring help further strengthen AI security.

API security also becomes more complex, as many AI-driven web applications rely on third-party AI models and APIs for natural language processing, image recognition, or analytics.

Using unsecured API endpoints in your operations may expose sensitive data or allow unauthorized access, making rate limiting, authentication tokens, and encrypted communications necessary to prevent potential misuse.
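Rate limiting is often implemented with a token bucket, one of several standard algorithms: each client may burst up to a fixed capacity, and tokens refill at a steady rate. The single-process sketch below uses hypothetical limits; a multi-server deployment would keep the counters in a shared store, and the injectable clock is only there for testing.

```python
import time

class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity, rate, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never exceeding capacity
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # the caller would typically answer with HTTP 429 Too Many Requests

# Hypothetical policy: bursts of 10 requests, then 5 requests per second, per API key
buckets = {}

def is_allowed(api_key):
    if api_key not in buckets:
        buckets[api_key] = TokenBucket(capacity=10, rate=5.0)
    return buckets[api_key].allow()
```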

AI models also require continuous monitoring to detect drift, where model accuracy degrades over time due to changing data patterns. Implementing real-time logging, anomaly detection, and automated retraining mechanisms prevents outdated models from making incorrect or insecure predictions.
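As one way to quantify drift, the sketch below computes the population stability index (PSI), which compares how a feature or model score was distributed at training time with how it is distributed in live traffic. PSI is an assumption here rather than a method the text prescribes, and the commonly quoted thresholds (below about 0.1 stable, above about 0.25 significant) are industry rules of thumb; the bin edges and samples are hypothetical.

```python
import math
from bisect import bisect_right

def _proportions(values, edges):
    counts = [0] * (len(edges) - 1)
    for v in values:
        # Find the bin containing v; values outside the edges go to the first or last bin
        i = min(max(bisect_right(edges, v) - 1, 0), len(counts) - 1)
        counts[i] += 1
    # A small floor avoids division by zero and log(0) for empty bins
    return [max(c / len(values), 1e-6) for c in counts]

def population_stability_index(expected, actual, edges):
    e = _proportions(expected, edges)
    a = _proportions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

edges = [0, 25, 50, 75, 100]
training = [10] * 25 + [30] * 25 + [60] * 25 + [80] * 25  # evenly spread scores
live = [10] * 10 + [30] * 20 + [60] * 30 + [80] * 40      # shifted toward high scores
print(round(population_stability_index(training, live, edges), 3))  # → 0.228
```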

While AI enhances automation and decision-making, security risks must be addressed through data protection, model validation, and API security measures to prevent vulnerabilities from being exploited by bad actors.

What Are Some Challenges Of Mobile-First Design In Web Applications?

Designing web applications with a mobile-first approach prioritizes usability on smartphones and tablets before adapting the experience for desktops. While this approach improves accessibility and aligns with Google’s mobile-first indexing, it presents several challenges in development and performance optimization.

Limited screen size requires taking a thoughtful approach to UI/UX design. Content must be structured for smaller displays, meaning unnecessary elements should be removed to avoid clutter. Prioritizing essential content while maintaining readability and touch-friendly interactions becomes more difficult as applications scale in complexity.

Performance optimization presents another challenge. Due to the limited processing power of mobile devices, optimizing resource loading, compressing images, and streamlining JavaScript execution are crucial for performance.

Applications that are not optimized for mobile performance experience slow load times, which increase bounce rates and reduce conversions. Network variability introduces additional constraints. Unlike desktops with stable high-speed connections, mobile users frequently switch between Wi-Fi, 4G, and 5G networks, sometimes encountering low-bandwidth conditions.

PWAs address this challenge head-on by using service workers for offline caching, but traditional mobile-first applications must optimize lazy loading, asynchronous requests, and content delivery strategies to maintain usability in low-connectivity environments.

Responsive design relies on adaptable elements such as fluid grids, but screen fragmentation across iOS and Android devices means that achieving consistency demands extensive testing.

Input methods also differ on mobile devices since touchscreens require larger tap targets and gesture-based interactions, which can conflict with more traditional desktop navigation patterns.

Implementing adaptive design elements, such as collapsible menus and swipe gestures, improves usability but requires careful testing to prevent unintended interactions.

Balancing mobile-first optimization with desktop functionality remains a challenge. Applications designed primarily for mobile users must still provide an intuitive experience on larger screens without feeling stretched or unoptimized. Flexible design principles, scalable UI components, and progressive enhancement strategies help accommodate users across different devices.

Despite these challenges, a mobile-first approach improves accessibility, search visibility, and user engagement when executed effectively. Applications designed with mobile users in mind from the outset provide better performance, increased retention, and a seamless experience across multiple platforms.

Get Started With Advanced Web Application Development

Building high-performing web applications requires a strategic approach that considers scalability, security, and user experience. The demand for seamless, fast, and intelligent applications continues to grow as businesses seek better ways to engage users, optimize workflows, and maintain a competitive edge.

Modern development strategies, including the use of PWAs, serverless computing, microservices, AI integration, and performance optimization, allow companies to create more flexible, efficient, and future-proof applications.

Having a strong foundation in web architecture directly impacts business growth. Applications that load quickly, function across an array of different devices, and provide more personalized experiences attract and retain users more effectively.

Security measures such as advanced authentication methods and regular penetration testing safeguard sensitive data and build customer trust. Scalable back-end solutions, whether through serverless computing or microservices, provide flexibility for handling traffic surges and shifting business needs.

Adopting these advanced strategies can help improve technical performance while also enhancing your company’s business efficiency as a whole.

Automating customer interactions with AI-driven chatbots, leveraging predictive analytics for smarter decision-making, and structuring applications to handle growth without performance degradation provide long-term advantages.

Companies that choose to invest in modern web development techniques position themselves ahead of competitors that rely on outdated methods. A strong grasp of development methodologies, security frameworks, and architectural design is essential for executing these strategies effectively.

Many businesses struggle with balancing performance optimization, security, and scalability while keeping up with emerging technologies. However, businesses that rely on expert guidance can bypass unnecessary expenses and transition to modern solutions faster.

At Orases, we specialize in designing, securing, and optimizing web applications using industry-leading methodologies. From custom web development and infrastructure design to advanced security solutions, our team provides customized strategies that align with your business objectives.

Take the Next Step Toward an Optimized Web Presence

Businesses that leverage modern web application development techniques improve user satisfaction, cut down on operational costs, and enhance security resilience. Investing in performance improvements, scalable infrastructure, and AI-powered automation leads to long-term efficiency and better customer engagement.

Let’s discuss how Orases can help your business implement advanced development strategies. Set up a consultation today to learn more about our customized solutions designed to improve performance, security, and scalability. Call 1.301.756.5527 or reach out online to get started.
